In this article, you’ll learn how to implement stateless authentication in Deno using JSON Web Tokens. A signed JSON Web Token can’t be invalidated before it expires, so we’ll add a persistent store like Redis as an extra layer of security. That means we’ll store the metadata of both the access and refresh tokens in Redis so we can revoke the tokens when needed.

By the end of this tutorial, you’ll know how to:

- Generate private and public CryptoKey pairs using the RSASSA-PKCS1-v1_5 signature scheme.

- Export the generated private and public keys in the pkcs8 and spki key formats before storing them in an environment variables file.

- Import the PEM private and public keys from the environment variables file and convert them back to CryptoKey objects.

- Sign and verify JSON Web Tokens (JWTs) using the RS256 algorithm (private and public keys).

- Build a RESTful API in Deno that implements the JSON Web Token authentication flow.

More practice:

- How to Setup and Use MongoDB with Deno

- How to Set up Deno RESTful CRUD Project with MongoDB

- Authentication with Bcrypt, JWT, and Cookies in Deno

- Deno – Refresh JWT Access Token with Private and Public Keys

- Complete Deno CRUD RESTful API with MongoDB

Prerequisites

- You should have Docker installed on your system to run the Redis and MongoDB containers.

- You should have the latest version of Deno installed. Run `deno upgrade` to upgrade to the newest version.

- You should have some basic knowledge of API design and how to build APIs in Deno.

Run the Deno JWT Authentication API Locally

- Download or clone the Deno project from https://github.com/wpcodevo/deno-refresh-jwt and open the source code in an IDE or text editor.

- Start the MongoDB and Redis servers by running `docker-compose up -d` from the console in the root directory.

- Run `denon run -A src/server.ts` in the terminal of the root folder to start the Deno HTTP server.

- Import the Deno_MongoDB.postman_collection.json file into Postman to access the collection used to test the API. To do this, open Postman, click the “Import” button, click the “Choose Files” button under the File tab, and choose the Deno_MongoDB.postman_collection.json file from the root directory of the Deno project. Finally, click the “Import” button to add the collection to Postman.

- If you prefer to do everything in VS Code, open the Extensions Marketplace and install the Thunder Client extension. Click the Thunder Client icon in the left sidebar, then click the hamburger icon next to the search bar. Finally, select the “Import” menu item and choose the Deno_MongoDB.postman_collection.json file in the root folder to add the collection.

- Test the JSON Web Token authentication flow by making HTTP requests to the Deno API.

JWT Authentication Flow

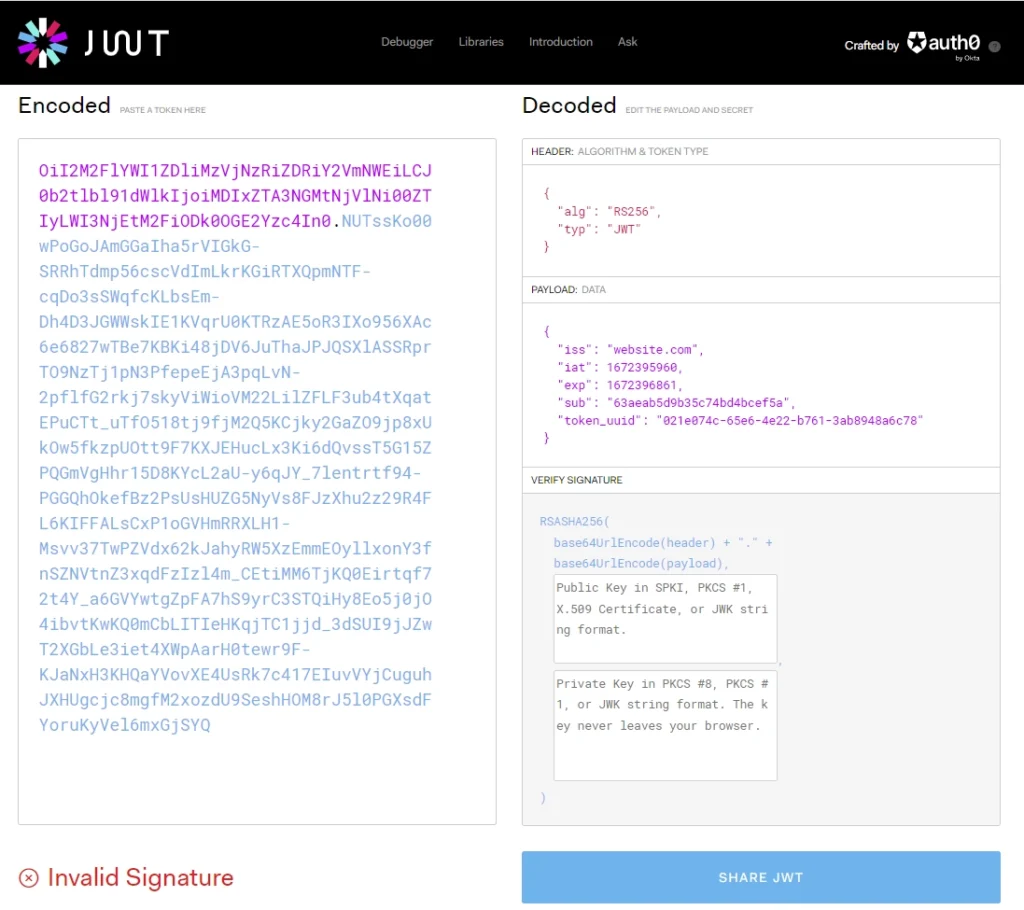

A JSON Web Token consists of three parts separated by dots (.):

- Header: typically consists of two parts: the token type, which is JWT, and the cryptographic algorithm used, such as HMAC SHA256 or RSA.

- Payload: contains verifiable claims, such as the identity of the user and the actions they’re allowed to perform.

- Signature: the result of signing the Base64URL-encoded header and payload with a secret (for HMAC) or a private key (for RSA), using the algorithm specified in the header.

- The user signs into the API with their email and password. The server then queries the database to check whether the user exists and compares the plain-text password against the hashed one stored in the database.

- The server then creates JWT access and refresh tokens using the user’s info and the private keys. Once the tokens have been generated, the server stores their metadata in Redis before returning the access and refresh tokens to the client or frontend application as HTTP-only cookies.

- For subsequent requests that require authentication, the client can send the access token in the `Authorization` header or as a cookie instead of logging in every time.

- When the server receives the access token, it uses the public key to verify the signature and extracts the user’s info from the payload. If the access token is valid and has not expired, the server queries Redis with the user’s info to check whether the user has a valid session.

- If the user has a valid session in Redis, the server queries the MongoDB database to check whether the user belonging to the token still exists before allowing the request to be processed.
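To make the three-part structure concrete, here is a minimal sketch that splits a token at the dots and Base64URL-decodes the header and payload. The helper names (`base64UrlDecode`, `base64UrlEncode`, `decodeJwt`) are hypothetical, and the sample token carries a placeholder instead of a real signature. Decoding only reads the claims. It does not prove the token is authentic, which is why the server verifies the signature with the public key before trusting the payload.

```typescript
// Hypothetical helper: decode one Base64URL segment of a JWT into a string.
function base64UrlDecode(segment: string): string {
  // Base64URL uses "-" and "_" instead of "+" and "/" and drops "=" padding.
  const b64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  const padded = b64 + "=".repeat((4 - (b64.length % 4)) % 4);
  const bytes = Uint8Array.from(atob(padded), (c) => c.charCodeAt(0));
  return new TextDecoder().decode(bytes);
}

// Hypothetical helper: encode a string as Base64URL (used here only to build a sample token).
function base64UrlEncode(text: string): string {
  let binary = "";
  new TextEncoder().encode(text).forEach((b) => (binary += String.fromCharCode(b)));
  return btoa(binary).replace(/\+/g, "-").replace(/\//g, "_").replace(/=+$/, "");
}

// Split a token into its three dot-separated parts and decode the first two.
// NOTE: this only reads the claims; it does NOT check the signature.
function decodeJwt(token: string) {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("A JWT must have exactly three parts");
  const [headerB64, payloadB64, signature] = parts;
  return {
    header: JSON.parse(base64UrlDecode(headerB64)),
    payload: JSON.parse(base64UrlDecode(payloadB64)),
    signature,
  };
}

// Sample token; "fake-signature" stands in for a real RS256 signature.
const sample = [
  base64UrlEncode(JSON.stringify({ alg: "RS256", typ: "JWT" })),
  base64UrlEncode(JSON.stringify({ sub: "user-123", token_uuid: "abc" })),
  "fake-signature",
].join(".");

console.log(decodeJwt(sample).payload.sub); // prints user-123
```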

Flaws of Stateless JWT Authentication

In the Deno – Refresh JWT Access Token with Private and Public Keys article, we glossed over the potential dangers and inefficiencies of using JWTs. The biggest problem with JWTs is that they can’t easily be revoked or invalidated because they’re self-contained.

JWT Invalidation/Revocation

A JWT can’t be revoked after it has been signed; it will continue to work until it expires. Imagine you logged out of Instagram after posting an image. You may think your session on the server has ended, but if the app relied on stateless JWTs alone, your token would still be valid.

Let’s assume the JWT issued by Instagram is configured to expire after 15 minutes, 30 minutes, or some other lifetime. Anyone who gets hold of the token during that window can still perform authenticated actions until it expires.

Resource Access Denial

Imagine you are a moderator of a real-time chat application and you decide to block someone who is abusing the system. The block won’t take effect right away, because the abuser’s token will continue to work until it expires.

Stale Data

Imagine a user is a moderator and gets demoted to a regular user with limited permissions. Again, this won’t take effect immediately, and the user will keep moderator permissions until the token expires.

JWT can be Hijacked

JWTs are usually signed but not encrypted, so anyone who holds a token can read its payload, and a stolen token can be replayed until it expires. An attacker only needs to intercept the connection between the client and the server, for example through a Man-In-The-Middle (MITM) attack, to capture it.

Suggested Solution of JWT Loopholes

Let me clear the air: no amount of security is ever enough. Attackers will always look for vulnerabilities in your code or in third-party packages to abuse your server. However, you can implement recommended security measures to make their job harder.

Store Revoked JWTs in a Database

One popular solution is to store a list of revoked tokens in a database. This way, we can block the user if the token included in the request is on the revoked list. However, this approach defeats the purpose of stateless JWTs, since an extra database call has to be made on every request to check whether the token has been revoked.
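Here is a minimal sketch of the denylist idea. An in-memory `Map` stands in for the database; this is an assumption for illustration, since a real deployment needs a store shared by every server instance. Entries only need to live until the token’s own `exp` time, because after that the token is rejected anyway. The class and method names are hypothetical.

```typescript
// Hypothetical in-memory denylist: token id (e.g. the token_uuid claim) -> exp in seconds.
class TokenDenylist {
  private revoked = new Map<string, number>();

  // Revoke a token until its own exp time; after that it is rejected anyway.
  revoke(tokenId: string, exp: number): void {
    this.revoked.set(tokenId, exp);
  }

  // Every authenticated request pays for this lookup, which is the trade-off.
  isRevoked(tokenId: string, nowSeconds = Math.floor(Date.now() / 1000)): boolean {
    const exp = this.revoked.get(tokenId);
    if (exp === undefined) return false;
    if (exp <= nowSeconds) {
      // The token has expired on its own, so the denylist entry can be dropped.
      this.revoked.delete(tokenId);
      return false;
    }
    return true;
  }
}
```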

Store JWT Metadata in a Persistence Layer

The solution we’ll use in this tutorial is Redis as a session store. This way, we can store the JWT metadata and have it expire after a set amount of time. For every request that requires authentication, we’ll query Redis to check whether the user has a valid session before passing control to the next middleware.
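The following sketch illustrates the session-store check under stated assumptions: a `Map` with per-entry expiry stands in for Redis keys set with a TTL, and each session is keyed by the token’s `token_uuid` claim with the user ID as the value. This is not the redis client API, and the names `SessionStore` and `hasValidSession` are hypothetical. Deleting the key revokes the token immediately, even though its signature and `exp` are still valid.

```typescript
// In-memory stand-in for Redis keys that expire after a TTL (illustrative only).
class SessionStore {
  private entries = new Map<string, { value: string; expiresAt: number }>();

  set(key: string, value: string, ttlSeconds: number, now = Date.now()): void {
    this.entries.set(key, { value, expiresAt: now + ttlSeconds * 1000 });
  }

  get(key: string, now = Date.now()): string | null {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (entry.expiresAt <= now) {
      this.entries.delete(key); // lazily evict expired sessions
      return null;
    }
    return entry.value;
  }

  // Logging out or blocking a user: the session disappears immediately.
  delete(key: string): void {
    this.entries.delete(key);
  }
}

// On login: store token_uuid -> user_id for as long as the token lives.
// On each request: the token's token_uuid must still map to the token's sub.
function hasValidSession(
  store: SessionStore,
  claims: { sub: string; token_uuid: string },
  now = Date.now(),
): boolean {
  return store.get(claims.token_uuid, now) === claims.sub;
}
```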

Set Up the Deno Project

First things first, create a deno-refresh-jwt folder on your desktop or in any convenient location and open it in an IDE. In this tutorial, I use VS Code, so open the Extensions Marketplace and install the Deno extension.

After that, create a .vscode folder in the root directory. Within the .vscode folder, create a settings.json file and add the following configurations.

.vscode/settings.json

```json
{
  "deno.enable": true,
  "deno.unstable": true
}
```

This enables the Deno extension for this workspace, so VS Code uses Deno-aware type checking and module resolution instead of its default TypeScript tooling. With that out of the way, let’s add the required third-party dependencies. To do this, create an src folder in the root directory. In the src folder, create a deps.ts file and add the following module URL imports.

src/deps.ts

```typescript
export {
  Application,
  helpers,
  Router,
} from "https://deno.land/x/oak@v11.1.0/mod.ts";
export type {
  Context,
  RouterContext,
} from "https://deno.land/x/oak@v11.1.0/mod.ts";

export * as logger from "https://deno.land/x/oak_logger@1.0.0/mod.ts";
export { oakCors } from "https://deno.land/x/cors@v1.2.2/mod.ts";

// Import the djwt functions and types from the same version so their types agree
export {
  create,
  getNumericDate,
  verify,
} from "https://deno.land/x/djwt@v2.8/mod.ts";
export type { Header, Payload } from "https://deno.land/x/djwt@v2.8/mod.ts";

export { config as dotenvConfig } from "https://deno.land/x/dotenv@v3.2.0/mod.ts";
export { connect as connectRedis } from "https://deno.land/x/redis@v0.27.3/mod.ts";

export {
  Bson,
  Database,
  MongoClient,
  ObjectId,
} from "https://deno.land/x/mongo@v0.31.1/mod.ts";
```

- oak – A middleware framework for handling HTTP in Deno

- oak_logger – A simple logger middleware for the Oak framework

- cors – Enables CORS in Deno

- djwt – Signs and verifies JSON Web Tokens in Deno

- dotenv – Loads environment variables from a .env file into the Deno runtime

- redis – A Redis client for Deno

- mongo – A MongoDB driver for Deno

Now let’s write some code to set up a simple Deno HTTP server using the Oak middleware framework. To do this, create a server.ts file in the src folder and add the code snippets below.

src/server.ts

```typescript
import { Application, Router } from "./deps.ts";
import type { RouterContext } from "./deps.ts";

const app = new Application();
const router = new Router();

// Health checker
router.get<string>("/api/healthchecker", (ctx: RouterContext<string>) => {
  ctx.response.status = 200;
  ctx.response.body = {
    status: "success",
    message: "JWT Refresh Access Tokens in Deno with RS256 Algorithm",
  };
});

app.use(router.routes());
app.use(router.allowedMethods());

app.addEventListener("listen", ({ port, secure }) => {
  console.info(
    `🚀 Server started on ${secure ? "https://" : "http://"}localhost:${port}`
  );
});

const port = 8000;
app.listen({ port });
```

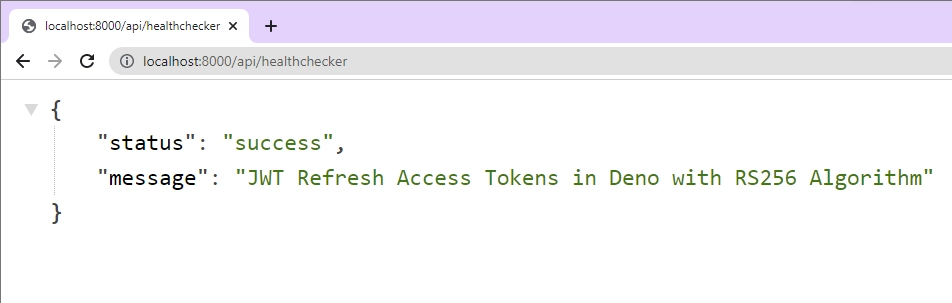

To start the HTTP server, open your terminal and run the command below. This will start the Oak server on port 8000.

```shell
denon run --allow-net --allow-read --allow-write --allow-env src/server.ts
```

Now open a new tab in your browser and make a request to the http://localhost:8000/api/healthchecker endpoint. Within a few milliseconds, you should get a response from the Deno API.

Set Up Redis and MongoDB with Docker

At this point, we are ready to set up the Redis and MongoDB servers with Docker and Docker Compose. In the project root, create a docker-compose.yml file and add the following YAML code.

docker-compose.yml

```yaml
version: '3.9'
services:
  mongo:
    image: mongo:latest
    container_name: mongo
    environment:
      MONGO_INITDB_ROOT_USERNAME: ${MONGO_INITDB_ROOT_USERNAME}
      MONGO_INITDB_ROOT_PASSWORD: ${MONGO_INITDB_ROOT_PASSWORD}
      MONGO_INITDB_DATABASE: ${MONGO_INITDB_DATABASE}
    env_file:
      - ./.env
    volumes:
      - mongo:/data/db
    ports:
      - '6000:27017'
  redis:
    image: redis:alpine
    container_name: redis
    ports:
      - '6379:6379'
    volumes:
      - redisDB:/data
volumes:
  mongo:
  redisDB:
```

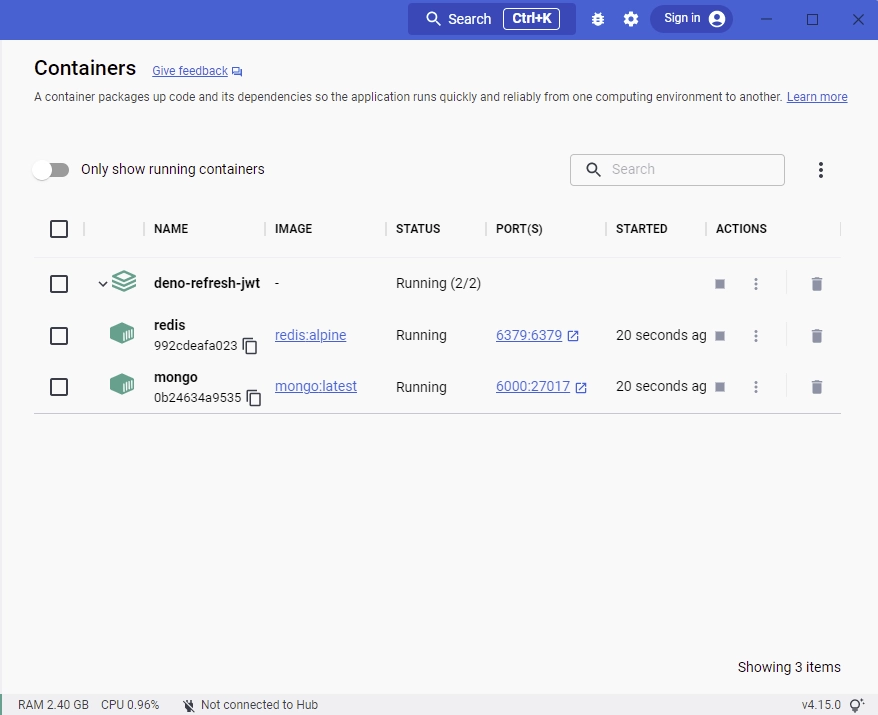

The above code will pull the latest MongoDB image from Docker Hub, start a MongoDB container using the environment variables that we’ll soon provide in a .env file, and map the container’s default MongoDB port (27017) to port 6000 on the host.

Then, it will pull the Alpine-based Redis image from Docker Hub, start the Redis server, and map port 6379 on the host to the default Redis port (6379) in the container.

Since we used placeholders for the MongoDB server credentials in the docker-compose.yml file, we need a .env file to provide their values. Create a .env file in the root directory and add the following environment variables.

.env

```
MONGO_INITDB_ROOT_USERNAME=admin
MONGO_INITDB_ROOT_PASSWORD=password123
MONGO_INITDB_DATABASE=deno_mongodb

NODE_ENV=development
SERVER_PORT=8000

MONGODB_URI=mongodb://admin:password123@localhost:6000
```

Now open your terminal and run this command to start the MongoDB and Redis containers.

```shell
docker-compose up -d
```

After the command has finished executing, open the Docker Desktop application to see if the containers are running.

Connect to Redis and MongoDB Servers

Now that we have both the Redis and MongoDB servers running in the Docker containers, let’s create some utility functions to connect them to the Deno server.

Connect to Redis Server

To begin, create a utils folder in the src directory. Within the utils folder, create a connectRedis.ts file and add the following code snippets. This will create a Redis client and export it from the file.

src/utils/connectRedis.ts

```typescript
import { connectRedis } from "../deps.ts";

const redisClient = await connectRedis({
  hostname: "localhost",
  port: 6379,
});

console.log("🚀 Redis connected successfully");

export default redisClient;
```

Connect to MongoDB Server

To connect to the MongoDB server, create a connectDB.ts file in the src/utils folder and add the code below.

src/utils/connectDB.ts

```typescript
import { MongoClient, dotenvConfig } from "../deps.ts";

// Load the variables from .env into Deno.env
dotenvConfig({ export: true, path: ".env" });

const dbUri = Deno.env.get("MONGODB_URI") as unknown as string;
const dbName = Deno.env.get("MONGO_INITDB_DATABASE") as unknown as string;

const client: MongoClient = new MongoClient();
await client.connect(dbUri);
console.log("🚀 Connected to MongoDB Successfully");

export const db = client.database(dbName);
```

In the above code, we loaded the MongoDB connection URL and the database name from the environment variables file. Then, we created a new MongoDB client and invoked the client.connect() method to connect to the MongoDB server.

Lastly, we got a handle to the database by invoking the client.database() method and exported the resulting object from the file. MongoDB creates the database itself the first time data is written to it.

Create the Database Model

Now let’s create a database model that we’ll use to query and mutate the MongoDB database. To do this, we’ll create a TypeScript schema type that has fields representing the fields of a MongoDB document and provide it to the db.collection() method.

In the src folder, create a models/user.model.ts file and add the following MongoDB model definitions.

src/models/user.model.ts

```typescript
import { db } from "../utils/connectDB.ts";
import { ObjectId } from "../deps.ts";

interface UserSchema {
  _id?: ObjectId;
  name: string;
  email: string;
  password: string;
  createdAt: Date;
  updatedAt: Date;
}

export const User = db.collection<UserSchema>("users");

User.createIndexes({
  indexes: [{ name: "unique_email", key: { email: 1 }, unique: true }],
});
```

The unique index on the email field will ensure that no two users end up with the same email addresses in the database.

Generate the Private and Public Keys

Here, we’ll use the Web Cryptography API to generate an RSA key pair, export the private and public keys in secure key formats, convert them to PEM strings, and store them in the .env file.

To begin, open the .env file and add the following environment variables:

```
ACCESS_TOKEN_PRIVATE_KEY=
ACCESS_TOKEN_PUBLIC_KEY=

REFRESH_TOKEN_PRIVATE_KEY=
REFRESH_TOKEN_PUBLIC_KEY=
```

Generate the Crypto Keys

Now create a generateCryptoKeys.ts file in the utils folder and add the code below.

src/utils/generateCryptoKeys.ts

```typescript
// Convert raw key bytes into a base64 string
function arrayBufferToBase64(arrayBuffer: ArrayBuffer): string {
  const byteArray = new Uint8Array(arrayBuffer);
  let byteString = "";
  byteArray.forEach((byte) => {
    byteString += String.fromCharCode(byte);
  });
  return btoa(byteString);
}

// Split the base64 body into 64-character lines, as the PEM format expects
function breakPemIntoMultipleLines(pem: string): string {
  const charsPerLine = 64;
  let pemContents = "";
  while (pem.length > 0) {
    pemContents += `${pem.substring(0, charsPerLine)}\n`;
    pem = pem.substring(charsPerLine);
  }
  return pemContents;
}

const generatedKeyPair: CryptoKeyPair = await crypto.subtle.generateKey(
  {
    name: "RSASSA-PKCS1-v1_5",
    modulusLength: 4096,
    publicExponent: new Uint8Array([1, 0, 1]),
    hash: "SHA-256",
  },
  true, // extractable, so the keys can be exported below
  ["sign", "verify"]
);

// Wrap the base64-encoded key in PEM armor
function toPem(key: ArrayBuffer, type: "private" | "public"): string {
  const pemContents = breakPemIntoMultipleLines(arrayBufferToBase64(key));
  return `-----BEGIN ${type.toUpperCase()} KEY-----\n${pemContents}-----END ${type.toUpperCase()} KEY-----`;
}

// Export the private key as pkcs8 and the public key as spki, then convert both to PEM
const privateKeyBuffer: ArrayBuffer = await crypto.subtle.exportKey(
  "pkcs8",
  generatedKeyPair.privateKey
);
const exportedPublicKey: ArrayBuffer = await crypto.subtle.exportKey(
  "spki",
  generatedKeyPair.publicKey
);

const privateKeyPem = toPem(privateKeyBuffer, "private");
const publicKeyPem = toPem(exportedPublicKey, "public");

// Base64-encode each PEM string so it fits on a single line in the .env file
console.log("\n");
console.log(btoa(privateKeyPem), "\n\n");
console.log(btoa(publicKeyPem));
```

Let’s evaluate the above code:

- First, we created two utility functions: arrayBufferToBase64() to convert an array buffer into a base64 string, and breakPemIntoMultipleLines() to split that base64 string into 64-character lines, as the PEM format expects.

- Then, we called the `crypto.subtle.generateKey()` method to generate the CryptoKey pair (private and public keys) with a 4096-bit modulus. A 4096-bit RSA key offers a larger security margin than the common 2048-bit size, at the cost of slower key generation and signing.

- Next, we exported the private key in the PKCS #8 (pkcs8) format and the public key in the SubjectPublicKeyInfo (spki) format, and wrapped each one in PEM armor. Exporting the keys as PEM strings allows us to store them on the filesystem or in an environment variables file.

- Finally, we converted the PEM private and public keys into base64 before printing them in the console.

We base64-encoded the PEM keys so that each one fits on a single line, which avoids unnecessary warnings in the terminal when Docker Compose reads the environment variables from the .env file.
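The whole pipeline can be checked end to end with Web Crypto alone. This sketch generates a key pair, stores each key the way the .env file does (PEM text, base64-encoded once more), decodes it back, imports it, and confirms that a signature made with the private key verifies with the public key. The helper names are hypothetical, and the default `modulusLength` of 2048 is chosen only to keep the check fast; the tutorial itself uses 4096.

```typescript
const ALGORITHM = { name: "RSASSA-PKCS1-v1_5", hash: "SHA-256" } as const;

function bytesToBase64(buf: ArrayBuffer): string {
  let binary = "";
  new Uint8Array(buf).forEach((b) => (binary += String.fromCharCode(b)));
  return btoa(binary);
}

// DER bytes -> PEM text with 64-character lines
function toPem(der: ArrayBuffer, label: "PRIVATE KEY" | "PUBLIC KEY"): string {
  const body = bytesToBase64(der).match(/.{1,64}/g)!.join("\n");
  return `-----BEGIN ${label}-----\n${body}\n-----END ${label}-----`;
}

// PEM text -> DER bytes: drop the armor lines and every whitespace character
function pemToBytes(pem: string) {
  const body = pem.replace(/-----(BEGIN|END) [A-Z ]+-----/g, "").replace(/\s+/g, "");
  return Uint8Array.from(atob(body), (c) => c.charCodeAt(0));
}

async function roundTrip(modulusLength = 2048): Promise<boolean> {
  const pair = await crypto.subtle.generateKey(
    { ...ALGORITHM, modulusLength, publicExponent: new Uint8Array([1, 0, 1]) },
    true,
    ["sign", "verify"],
  );

  // What ends up in .env: the PEM text, base64-encoded once more
  const envPrivate = btoa(toPem(await crypto.subtle.exportKey("pkcs8", pair.privateKey), "PRIVATE KEY"));
  const envPublic = btoa(toPem(await crypto.subtle.exportKey("spki", pair.publicKey), "PUBLIC KEY"));

  // Reading it back: base64 -> PEM -> DER bytes -> non-extractable CryptoKey
  const privateKey = await crypto.subtle.importKey("pkcs8", pemToBytes(atob(envPrivate)), ALGORITHM, false, ["sign"]);
  const publicKey = await crypto.subtle.importKey("spki", pemToBytes(atob(envPublic)), ALGORITHM, false, ["verify"]);

  const data = new TextEncoder().encode("hello");
  const signature = await crypto.subtle.sign(ALGORITHM.name, privateKey, data);
  return crypto.subtle.verify(ALGORITHM.name, publicKey, signature, data);
}
```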

Now open your terminal and run this command to generate the private and public keys.

```shell
deno run src/utils/generateCryptoKeys.ts
```

After the RSA key pair has been generated and printed in the console, copy the long base64 encoded private key and add it to the .env file as ACCESS_TOKEN_PRIVATE_KEY. Next, copy the short base64 encoded public key and add it to the .env file as ACCESS_TOKEN_PUBLIC_KEY.

Now let’s repeat the above process for the refresh token’s private and public keys. Clear the terminal and run the deno run src/utils/generateCryptoKeys.ts command again.

Copy the long base64 encoded private key and add it to the .env file as REFRESH_TOKEN_PRIVATE_KEY. Also, copy the short public key and add it to the .env file as REFRESH_TOKEN_PUBLIC_KEY.

In the end, you should have a .env file that looks somewhat like this:

.env

MONGO_INITDB_ROOT_USERNAME=admin

MONGO_INITDB_ROOT_PASSWORD=password123

MONGO_INITDB_DATABASE=deno_mongodb

NODE_ENV=development

SERVER_PORT=8000

MONGODB_URI=mongodb://admin:password123@localhost:6000

ACCESS_TOKEN_PRIVATE_KEY=LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUpRZ0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQ1N3d2dna29BZ0VBQW9JQ0FRRFRvdDNVWjhYWjcyNW0KS3VsWHNUckhNVndvREYzQ21ibWpxZG5RNmxvMHA2WjdWd1VBc0NKS1VQUXMvWGZjVjVobHRGeEVhL3NLZWkxcQpTRWJqTFFWaFpGQTFINkpObTltWHZLQTFUQXpCeWNDajFKbUd3d1hobGxxRGhNWkdsUk90SnlVVEk1akZSOThQCkhqcWVQeDg1S1JlMytmVUplMEZKVHpjNWNYYjRDY1RmRkJlZWpsb3VwZ2pRbkVuRUdYRCtaeTNxemxxY0gwcHgKWWhoTFNvb0MxN0lUcWhvY3BKSEVvMkhZRzBHK2UyU1VEZnViL3JmK1ZOUXlpOFVKRDBsMUszSGQyUm4zbmJJRAo0cGpHTkdtMkdySkFLeVpOOFh3VjByNTRWVituRkd3ZjhCN21kckZzN1RCakZPYnI3MVVTRGtERE8yU0JHQ1FiCkZOeU9RU1V5ejUxK0h6QWZRUjZWNUplcmFJZnBXa2x3a3ptNW90K1VEeXJUcy9uYVpFNTFTazdVVUg1dWI1SFAKY0ZFT3ROUTBCcXpOdithY0lDbzcrcGJqSzRWcXBzZGxhMy85aWI2QkpJajhweDIza2FrY1dMU0JEL1pqQ0E4eAo1Z1dqWjI5dFhrZWdUNzJvaStLQUUvRWM4djZoanM3c2ZoSVpDQTJJMkJaYnVxZ0pNMzVnaHkwVzFkckhGSHN1ClVVWjdTTGg0T1Z6SnI2ZG4yUHdvSjIxY2tSdTF4YkpmbU1DWklhRDFXTGI3aU5zeklsTjQ1Q1Y0Z0NqL25zb1QKL0YrQ1VqTHZISExOMlRCN1VpUDVPQWVSVEFmYndtQ0JTOS9jMmh0UzFjUnI1ZEs1VVU5ZmdXN3lldTgzM0loUQpQSEJHME9jMnlOMmR0dnBEclFEUUlndjdSRHdlSndJREFRQUJBb0lDQUgwNlF0NmJaUFEyKy9GU2RPNWh2WEQrCllSU0ZkTGxnY3Z0SDFzNEt6Y09ZYkNkUmIzRmZ4M3FIK21QZ3U1clM3aWRJR015WHhGMEh2SFhHUE1QUjhQd1cKK21ya3hBbitMVHZlN2tGN05aVTVoMWFweHdwNXZiSWxZSHV3Qmc3ZnlWdk03T0F0VVFsekpLYnljU2NRSEs2YgpCU0Rrczd5ZmhSc1cxNHVTK3gxNzBsVlpzendyNldydTdncGFZRCs3K2lOZTlFbWJQdnhnZTVFcHhVeFAxK2drCnI0ZDVRS2d3TE56WS9GMStpMkZsN0RhN0syVzd6QzJmRGt5MmJhbVZ0UmF5MVZhN1R1VTVGNHU2K2tGVjVETlEKQ3Fkem9OL0FBM2Q2VXlBckRFVjJEU0M4MmR2dTRiK2RmZU16REEwUmVob0wrd0JLMVlhWnZVdVZCWWpiUFdHRwo1MWh6bFpSMlJOejJPU1JTRGdqVXdSWDd1MVY5VldNWG50NEhUY3lGSjlHVWRZMDdtS1EzOXUvN1FQOHpPQVp6Ck9CazdBM1QxU1Z4bHg5d0lZeHRUUFVCaXhSbDR5TXBTcitpYTlkeTMvSHVpVkNwVnRnYXJaNHN5K0xsTnNRWisKc01MVDZTWkoxMnNBNy90YkFRTkZselNCN0lZbTdNME1vZ3pQeWJpN0txeGp2MnVON0RQZE5Kb0F5bzUvRE15SgprNjlXaEdJMElYZDJDQU5XNWlzT2NiYkh4U3UrbDRuTitBbUtxd3hYWmVMQjFFaEh2ZzBURS9QSTJKa2JZN2FnCnc5VTBPQzZBOTNBMTBsR25NQXJySzVwaGlHYm9Kc1hpa01IekQ2cTRuWUN1M3FBQ29CTlZ3TDZ6eXMybEc0WUIKdmVVSXl4RDVydVV1UHBkdnhsdlJBb0l
CQVFEdjhLc1Q4eGM5MW5WRmo2UFQzd2o5b1NpTzFheUNWYkNVOFdCZQpQTUxrNlM4eFFIUkc1NUNlV25jRDBEOXJuUzVHbDlnUzRiYVo3ZFZEUjZtbDB5MGZ2ZzRjMzV5ZjZyUmpiUlJVClV5ZHVYVCtzQlZMTkMrQy9tODk1MGRsK1JJSDREMHFHRlhaLzhTNGFCaUlNQ1ZDa0poU0ErT0dDeTgvbE11ajUKTW1IUis4bE13Zmp3NUNYQXJWYnpRMHpUZ1lYd2Z2TXkzSjlOejgyRXdzL0pORDl2U3Rxb2EreVg4cTI2UFlyOAp1VDlyb1hJbFVmYm5XSmJ2ZXlaZVc5ZXdOendvNVNNeHU5YVU4alYrVVlYaEFnWm9PM3Y3NloxRmVoUmxzM25vCkthc2cxZWs2MlVBaXZzaVoxbktQYVFMemtpYVNXVnM5eEJObWI0YTVnS3Z5MHJndkFvSUJBUURoelRjMWh3aXYKNm15T3pkM2dyaW83OTV0WHRvZHkzZXhLV0F0VHRONFNSdWlhL2luelJ1eGpydEhsb0xtYTd2MHNZRVJKTVhHYQpnQjhpaXUvRXZ4R29mVGhIcHU2cGE5aFptK2I3OGY3NHZkMnk4MUV2SjhTM3dEUEhrd2JpMzg1cDk5Q3JvSHZPCkxreWxmM1Btd0UzYkxRUzlUU0Z5M3pBL21PMXV3a21QbXhYVVJrV1JOSHpkVVN1YzlmTTlBZVkvWXFRWXgvUUMKM2hBVXpaOE43NnpCbnZ2VUxQWmxwNHNwa0FvekV3aFR4VENEMnNsM3FMd3NXcDBnV0c0a3A1b3Q2Zy9ETkxKTgozZXlYK3dIb2xlWEJadW5VVVNsV0lQMVB4aUl3ajRDZU9zNGJPd2hFb294d3VnSlpzQVJDZGEvdlROa2FLMGNlCldHcG0zTCtJNFFPSkFvSUJBQmQwQ09Uc1VBdEZXVFV4Y3l3VWt3Wm5xRlU5NFp6anoxemZzekhDOHJINWNSbDUKV1dSTTRqLzRTOFhkcHpWWHFkeFFuMWhKSTlZci96cVNXS3pTMVloU3hZSmhBU2hJZ3RWdEpoMlArenk0ZEs3VgozbUFZbHlGams0WXUwdm1hckxHWW5RbzZNdGtTdEJUcklJellwRDlIVVozQnRobFkzcnRpbkk4dk00eVk5ZlpBCng1cVVVblJnL1N6T0dVWmJWTUpMUm01a1RsWUd4K29BT050TDloOWt5N2JHeGR1Y1p3cmJWU2lhMnU0a1c4bjIKRnhKS0FJYnNITFlBZURiTFQyQVg5YmE0eTZMSGdoOFV6T2RQa1Z6QzQ3MmQramQrVlZ3VGpRajZlYlc5OHd4RApqQmRaV3JaZTFkZmF4ZVVWRmh3Y0MrVWZzMTNCN1FOWTVuWFh6eFVDZ2dFQUs5ME1tNFpXeHEyWVZ3bGd6N09sCm1xNlg2NnNXbHRiTGZ3bXBjYUpSL1dUdTdLVHhDMFE4eVlSOVc4a2tKUmZGOEtmbXUvMHgzMXlDTDlpamlTbkEKeVdWQjJKRnlEVkZZM3RkdFFJWWJETUQ5WHpUckVXajlTdUM0YmsxK2FmWW1CK25QREhnSmROMERvS2Fvb2l1NwpOQmVEc3k1WGtCUVJNRm1KemhsSjV1NnVoK1Q2d0tGY25EV1hiazlNNkE0RlowekhLZFUxN3BTcXRRL1lsUUY1CklzZTZqZFlLSzJjbm5uUlB0dW84bE9GYWNsSy9EbEtsODB2SytDeVZnT05hRFE5SjdwYS9DR2RTL1pjU0lOZDEKb1dOWGl4b1ZHSmtoL0N3MkdnN1dZbVowQVZBdlkvM2JvRTVTQkpBdjA2VSsveEtEbmhUSUpQbngrWGRxY2JHYwpXUUtDQVFFQXBFWTdBM2h5UFl4NmtiWTc1b3o3UmRSdkltaEJ1d1FxU0d1d0NGSU4vaWMyME13S0x1M3ZYM2JzCmNqcTRVTmR0NmhkSUNIbklaRUhuSWhxYW1ZaUR
XNTNEMWVIbW5KL1E1Y1AvUWtRT3hFWTc4MXljaWNhZ1JSb0YKczMyVHYzZkwydTJIcGtBS1B6NWc2RWszMUtmVncrYyt4YmN1MzNWNlFieU43ZnBObnFSelNMQ1FpQzhoTENTbgo0MS9ES3FObHFaZzVMVzhzSzF2Y1RVcXl0OEl3RlRMVC9abEVYaXRSVkdvUk5LYTJPUVdiYkxtMnFaQlV5UnpzCmxFcVh1a1hSM29LTCs4ek5YWUhnUGk1TlowUy9QZXJzSVovTWVkMUx4N1F3U01aazhsQnJVQWdxdTNnL09wNkkKaThSQ2hSaERWZTZYOEw2RUhTVGdPVGRJdXl6bXB3PT0KLS0tLS1FTkQgUFJJVkFURSBLRVktLS0tLQ==

ACCESS_TOKEN_PUBLIC_KEY=LS0tLS1CRUdJTiBQVUJMSUMgS0VZLS0tLS0KTUlJQ0lqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FnOEFNSUlDQ2dLQ0FnRUEwNkxkMUdmRjJlOXVaaXJwVjdFNgp4ekZjS0F4ZHdwbTVvNm5aME9wYU5LZW1lMWNGQUxBaVNsRDBMUDEzM0ZlWVpiUmNSR3Y3Q25vdGFraEc0eTBGCllXUlFOUitpVFp2Wmw3eWdOVXdNd2NuQW85U1poc01GNFpaYWc0VEdScFVUclNjbEV5T1l4VWZmRHg0Nm5qOGYKT1NrWHQvbjFDWHRCU1U4M09YRjIrQW5FM3hRWG5vNWFMcVlJMEp4SnhCbHcvbWN0NnM1YW5COUtjV0lZUzBxSwpBdGV5RTZvYUhLU1J4S05oMkJ0QnZudGtsQTM3bS82My9sVFVNb3ZGQ1E5SmRTdHgzZGtaOTUyeUErS1l4alJwCnRocXlRQ3NtVGZGOEZkSytlRlZmcHhSc0gvQWU1bmF4Yk8wd1l4VG02KzlWRWc1QXd6dGtnUmdrR3hUY2prRWwKTXMrZGZoOHdIMEVlbGVTWHEyaUg2VnBKY0pNNXVhTGZsQThxMDdQNTJtUk9kVXBPMUZCK2JtK1J6M0JSRHJUVQpOQWFzemIvbW5DQXFPL3FXNHl1RmFxYkhaV3QvL1ltK2dTU0kvS2NkdDVHcEhGaTBnUS8yWXdnUE1lWUZvMmR2CmJWNUhvRSs5cUl2aWdCUHhIUEwrb1k3TzdINFNHUWdOaU5nV1c3cW9DVE4rWUljdEZ0WGF4eFI3TGxGR2UwaTQKZURsY3lhK25aOWo4S0NkdFhKRWJ0Y1d5WDVqQW1TR2c5VmkyKzRqYk15SlRlT1FsZUlBby81N0tFL3hmZ2xJeQo3eHh5emRrd2UxSWorVGdIa1V3SDI4SmdnVXZmM05vYlV0WEVhK1hTdVZGUFg0RnU4bnJ2Tjl5SVVEeHdSdERuCk5zamRuYmI2UTYwQTBDSUwrMFE4SGljQ0F3RUFBUT09Ci0tLS0tRU5EIFBVQkxJQyBLRVktLS0tLQ==

REFRESH_TOKEN_PRIVATE_KEY=LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUpRd0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQ1Mwd2dna3BBZ0VBQW9JQ0FRRFpqbkxqYUdCUUN0VmUKbFpIZGF2bHBvWVRaOExJd1VDeG02TWhPQzVJQzdoV3dBVzdSUFdvb2xiZjRzUXZMQUx6ZEVJV05iblloWWlZcApUZzlNRmE3eFdBcW9tMUMrRFRMa29LQ3BqNExmcU1WVnl2ZlMzcGlKZXpUOEl5VmhjMC81dzg3RlF5dHdkS3o1CmpnMEZPbEh4c2lwclU2VTJ0SlBwdTFSUVh3eFRsNVRpWWhEeGhwWUc5NXAyZDl4V2tyUzFnell1ODVLQkMvU2MKRWJicW90ZHhuMUxxSDlCakR5UVM0MVBXNXphUExNdko1d1NIT2xqWUMvdlNnSXN4N0oyTFcrZzFybmtuamw4eQovbUR0RkJsZC9qYnRIZStZVUZLby84cXpkak80ajZHUUh1N2gvVGFXT1dJYjdUWlBsdnB2TS92djljeHVYUmcwCnFRYjA0L2dIbG03dkxIM2ZicnZjUGFrZzBNdm5VVVdoaVRWc3lCdDdMZUxrMzhmeFBxUFQwQUhGT0YvcFdOMVcKaWd3R1d6UDYxUzRvNkwrU0RmZFpBS3c1R3BMRzZ2M3Y2aFhranVBdUY3TjVTMWRYOXNVbml4OTdZNUpLSmptbQpYb2ZYNjYvZ05GdUhtOXZqenVENnB0MHB6R0taVGl0VWIwbVUwT3h2UCtWVmlXY0VLYmo1MEkrNm50OVptWXZqCkd4L1JTTC9lcDNjVDU1d1FYUGJrWWFpeklCU3o0WTM4dmI3SVRQdlBNbTYrSTJDMnl6OG5lODFRdEhxMXBwN3gKT2VZUXV3ZlN0U2s4RkN3LzZ4LzZLMWl3RXhvVXZ5TUhPd1FwTWVhNFU2WVl6SFlWUXU2STNNNjFPdmFMQS9wZgpwUmdrVEZvM1lXSmdJQTIyZXRYZEhXS1gxNTBMbVFJREFRQUJBb0lDQUJmUmI2U1QvYzlsa0R5ZHRXMS9VN1VMCjJPYWZZbkxGcEViVC8zSUQ5RVZiMllYK2NpcDRSZElScWlXUkJKQ0NFU3RHcnNod0tvQzNKU1JxaG1RM0Q0TWUKdDNRRUpRL1psQlBSdmlVeU1BcmFpRmcvTTJpanRDR0JHcWpzRkNDYUpreGE4cDFJSUU1Y2g3OStuTkJRdFQzMwoyb3NMaWsvMTd5ZXN1YXRlN0pPT1NkK0xDdjNXVlVDSUJTSXBOemRITW4rWTBPck5BZUljOC9mT1BLOXRpcGJHCjhhWHVPN3RNb2c5clVmZDZNcy8wQUkrbk9paXY2NkFqbDd0UkZXd3Uwb1M2V0M3Z1hpVkZnZ2lzTHVKbHkrMHQKY3NmOCtnazc2Z0RVbUZXVGdGQVpjWCs4MHp4c28zaEk2Z1BTc1pwL0ZnWHN0QTF1WktaRWpPemZBSUw1SG1OSwpsRmxYRmxpbDhDUDBreFppQTRqMGtpNUZodzRFTEkxdUFJeEY1NE0yazczVjVLdDgycVd0NTNJbzRjTi9xUzVBCnRCbnJjdWJaQjJmaDZDYkhmNTN4ZjNnZm5JMzlNbG82VzdCY2JiMWJGb1ZTbm5CYXhoWGtJZHZRVUV0Z2JZY2cKSlo2SGdwN1B2Y2k4azkzSUtCeW0ycmtvNGNTYitiT0VLTmFhU0drMWVRd0NNZU80WlNadjJDS2Q4bEdMem1CcgpTU1BtTkVPTEdBSEphRi81MzZ1aDRyQVk1RVBOc0N6VUpIa2VYbGdGMTlDNVlrQVVZTVVBeFFRSlJXRUY1VVYwCkxtT2lKOGVPMFI3d2J2Q2RaUGtGazB6cERJS3hrNVNVc0hDU0lFVE5JS3Y2V3ZXT0JLbmFuWStCVzFwRGpJN28KV1o4RktBckRIN1Q4S0txbHFrSHRBb0
lCQVFEeWYyTmpRVDVzbkVON0xXUkZsVVBMaTlBNGpwVU1VUWlhakxCQQpqUUFuQ2JqYUs5TzhhNWZwRTEwaHVUY2o1WFZWeEErUzdpMXdpVzQ2VTdCQmJqWlVkd1hIZEl6cnAxZ3Bib2NFCklWL0VDelFiMGRHL2M3NFY1SkFVVVJRenc3QkxXNVcyWXoyc20xTkhoQWtwK3FtSWcrbmhCeWZ2b0hhVTMreXAKZFhoWnN4aXByT1ZHMVEwOEJRTG15anRYUzNVZlUwZytaQU1tblg1MFIvZWpKY0RqdXpqN0FLejUxY0xrazZLMgpjWnZvVGExUWpiNjJCKzhVQUtPdHRxejdJZG9pSnVoMjRTYXExZHBKVVhRTUVueFJIUDV0YUlsdXVZR3ZBYm1DCnphMUsyb3MvVjk3K1ZtMUlxQy9TTjZ0WXRmdmg4SHZBWHlSUCtsWlh0UTloUHFZWEFvSUJBUURscTRzOFBpd3YKQkFVekh5OS8xYW5ETWxreFdXWWZQcXR0eEYrMDVUTXExYllBSVFqeFRKc1BNME1TNk4wVWg5eUdKUzRDZWlWdAozQkFNZEdsUjhIVUE4YTVWYWVBRFpzK3llVG9Qbm1BUk1TMU5SaEJheUVsRG5OY2hrampVQ3BvYWtWQ0RmWDFwCkpWakxIVDZhcy9ueElrS1N1TlB1YzQ3WkNQTk9nMzVDTUNSNGFzK052ZzZ0QUEvNTdldGE5aXVhdFhmV2RHVUIKczlCcVpKSnlvbmhYWnlmMnJjL21ieU1GUmV3TWd2dDdYeUsySmppcWR0MSswTXZYdENSeHlSZ0hBakRGbUswTQp2b1VEcmFSRlZ6ZVRndHdEVTMxSy81d0kxRWZLVy91OU9jNmYwa0Ewb0I3bU1XU2gyNzJhUERYbVFSSEVGT2NLCitxS1NQWlB3ZDVuUEFvSUJBR0lJT2FlZ2NwbjV1aFlMemFPTHFqS1pQUDRBTmlVYWhUM2xia05LUFN1SzlKM08KWmZTZ0VuTjVEb2RabHY3OS9pZEQ4WC9XcGF2L0F2NjFZbVd4Sm1tVERGVUx1d1J4VEdURGQvV2xnRStDci9nbgpKSUlmU2xNVGFXT3RPMXVKMnJVOE94UFdudEl1b01ZaWpJblorYnRraUtJZUFIa1JCNTg3dnpMcWVGTGE0amVGCjI5Sjh3ckxtMjd0dE9md2FWeWpveENYa3pKbEp4aHRBRk01eHJyN2hxekZkbnBBSmFKWjdVS1lzMjNoWUhwNlkKRHVjTDRneldEVlZtcWh1RUhlajhqYkd4WjY1Y2NiaCtJMG5XRjBlN1R1ZndBTTh3VTBycWlaSmxqNDdaTnIzTwp5aWxMeXpZNk44cm1FbkQwY1BWd0FMZE9QeUhOOUNYVTNualRtTlVDZ2dFQkFPTnc5MGpvZFE3MlYwUGlIVUxtClQrRExTb0xSZW8xMG5ZWHRrNjNyMExrWnZNdng2dzR6QTllUXQxclJtcWFMU1ByYmRPM2xFbzN5QVQ2a1JleHMKU1NKdk5HckhsNTBtd29hSEFOV1l6S0FaNkRmL0s1RUxpV3BZdHI4N00rWGd2ZTJUZkgxSzE5ZzVzTzRzZnVQcgpXWmpQaWNnTkcydW5xbzRLREJEenJTUlUwcmtoWlh1RC9McWNOallXeEIxbmJaVWZJcGNRMnpwTlhSY1BrK3ZNCk00cXkwR084aXdjemhpWGhzYnBPT0VkYjFsODJDS1hmWXNnRWMrbWdMdnN6M3dTSnljelV2b0xCWmE1WDFqY0oKQVRPbXdzVFVlRjYrTlVLVkhxY3FZbWxwQnRORS9tcGZLMXBoRGJ3d2hWcHBTQ05HeXhZNGNQbHhiVytQWmFNYwpmZ2NDZ2dFQkFMV1VFUnRYRnYrZGtCcXVJS2JvU0duOWduUHV6eitTWXBQbGQyTjNHdThjeSsyMnRHS21ZQUFpCkJuNmJWc2ltMWZSR1FhTzhzT2U5cGQ4T25pS2
YySFVVZDl5WElQeTc4S1lDRGdLaXM1T2xrOHUwYmRPaFNmY1gKQVFJYzJld2ZBNVJ5U1hxWkZQWjU3ekc1a241aUE1alp4dDl5WDd0T2lZNVREdkx6NTFpWUFZbXZhazkwcW1YTwpSN1hqckx0NUlkZE56ZzVOTUFZMTJHZGVOL3NUTHdtQzZQWWpDd244MXlUQ0RXTTNrMUFRc3BUTDhDOGZxL2paCndNQkszd3BDR3h6d1FUTHU4U3lNc1JOZDZHOFVUbkdjcEVERlBtOG14YndjQVI5VGg3Q1A5MjJyTHhwWHNmVkMKTWpTbmoxRkQ2azB6eVBvME1tZ0RzcCtBUUlLY3E2TT0KLS0tLS1FTkQgUFJJVkFURSBLRVktLS0tLQ==

REFRESH_TOKEN_PUBLIC_KEY=LS0tLS1CRUdJTiBQVUJMSUMgS0VZLS0tLS0KTUlJQ0lqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FnOEFNSUlDQ2dLQ0FnRUEyWTV5NDJoZ1VBclZYcFdSM1dyNQphYUdFMmZDeU1GQXNadWpJVGd1U0F1NFZzQUZ1MFQxcUtKVzMrTEVMeXdDODNSQ0ZqVzUySVdJbUtVNFBUQld1CjhWZ0txSnRRdmcweTVLQ2dxWStDMzZqRlZjcjMwdDZZaVhzMC9DTWxZWE5QK2NQT3hVTXJjSFNzK1k0TkJUcFIKOGJJcWExT2xOclNUNmJ0VVVGOE1VNWVVNG1JUThZYVdCdmVhZG5mY1ZwSzB0WU0yTHZPU2dRdjBuQkcyNnFMWApjWjlTNmgvUVl3OGtFdU5UMXVjMmp5ekx5ZWNFaHpwWTJBdjcwb0NMTWV5ZGkxdm9OYTU1SjQ1Zk12NWc3UlFaClhmNDI3UjN2bUZCU3FQL0tzM1l6dUkraGtCN3U0ZjAybGpsaUcrMDJUNWI2YnpQNzcvWE1ibDBZTktrRzlPUDQKQjVadTd5eDkzMjY3M0QycElOREw1MUZGb1lrMWJNZ2JleTNpNU4vSDhUNmowOUFCeFRoZjZWamRWb29NQmxzegordFV1S09pL2tnMzNXUUNzT1JxU3h1cjk3K29WNUk3Z0xoZXplVXRYVi9iRko0c2ZlMk9TU2lZNXBsNkgxK3V2CjREUmJoNXZiNDg3ZytxYmRLY3hpbVU0clZHOUpsTkRzYnovbFZZbG5CQ200K2RDUHVwN2ZXWm1MNHhzZjBVaS8KM3FkM0UrZWNFRnoyNUdHb3N5QVVzK0dOL0wyK3lFejd6ekp1dmlOZ3Rzcy9KM3ZOVUxSNnRhYWU4VG5tRUxzSAowclVwUEJRc1Arc2YraXRZc0JNYUZMOGpCenNFS1RIbXVGT21HTXgyRlVMdWlOek90VHIyaXdQNlg2VVlKRXhhCk4yRmlZQ0FOdG5yVjNSMWlsOWVkQzVrQ0F3RUFBUT09Ci0tLS0tRU5EIFBVQkxJQyBLRVktLS0tLQ==

Convert the String Keys to Crypto Keys

At this point, we know how to generate private and public keys. Now let’s write some code to convert the PEM private and public keys back into CryptoKey objects.

In the utils folder, create a convertCryptoKey.ts file and add the following code:

src/utils/convertCryptoKey.ts

```typescript
// Remove every line break; a plain string pattern would only replace the first one
function removeLines(str: string) {
  return str.replace(/\r?\n/g, "");
}

// Decode a base64 string into raw bytes
function base64ToArrayBuffer(b64: string) {
  const byteString = atob(b64);
  const byteArray = new Uint8Array(byteString.length);
  for (let i = 0; i < byteString.length; i++) {
    byteArray[i] = byteString.charCodeAt(i);
  }
  return byteArray;
}

// Strip the PEM header and footer, then decode the base64 body
function pemToArrayBuffer(pemKey: string, type: "PUBLIC" | "PRIVATE") {
  const b64Lines = removeLines(pemKey);
  const b64Prefix = b64Lines.replace(`-----BEGIN ${type} KEY-----`, "");
  const b64Final = b64Prefix.replace(`-----END ${type} KEY-----`, "");
  return base64ToArrayBuffer(b64Final);
}

export function convertToCryptoKey({
  pemKey,
  type,
}: {
  pemKey: string;
  type: "PUBLIC" | "PRIVATE";
}) {
  if (type === "PRIVATE") {
    // Private keys are pkcs8 and may only be used for signing
    return crypto.subtle.importKey(
      "pkcs8",
      pemToArrayBuffer(pemKey, type),
      {
        name: "RSASSA-PKCS1-v1_5",
        hash: { name: "SHA-256" },
      },
      false,
      ["sign"]
    );
  } else if (type === "PUBLIC") {
    // Public keys are spki and may only be used for verification
    return crypto.subtle.importKey(
      "spki",
      pemToArrayBuffer(pemKey, type),
      {
        name: "RSASSA-PKCS1-v1_5",
        hash: { name: "SHA-256" },
      },
      false,
      ["verify"]
    );
  }
}
```

That’s quite a lot of code, so let’s break it down. We created four functions:

- removeLines() – Accepts a PEM key and removes the line breaks from it.

- base64ToArrayBuffer() – Converts a base64 encoded string into a byte array.

- pemToArrayBuffer() – Strips the PEM header and footer from a PEM-encoded key and converts the remaining base64 body into a byte array.

- convertToCryptoKey() – Imports the PEM key as a CryptoKey object (pkcs8 for private keys, spki for public keys) so we can use it to sign or verify the access and refresh tokens.
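One detail worth checking in this kind of PEM parsing: `String.prototype.replace()` with a string pattern replaces only the first match, so stripping every line break from a multi-line PEM key needs a global regular expression (or `replaceAll()`). The sketch below shows the difference; `pemBody()` is a hypothetical name for the header/footer-stripping step that pemToArrayBuffer() performs.

```typescript
const pemLike = "-----BEGIN PUBLIC KEY-----\nAAAA\nBBBB\n-----END PUBLIC KEY-----";

// A string pattern only replaces the first "\n"; two line breaks remain.
const firstOnly = pemLike.replace("\n", "");
// A global regex removes every line break (including Windows "\r\n").
const allLineBreaks = pemLike.replace(/\r?\n/g, "");

// Hypothetical helper: keep only the base64 body of a PEM key.
function pemBody(pem: string, type: "PUBLIC" | "PRIVATE"): string {
  return pem
    .replace(`-----BEGIN ${type} KEY-----`, "")
    .replace(`-----END ${type} KEY-----`, "")
    .replace(/\s+/g, "");
}

console.log(firstOnly.includes("\n")); // true
console.log(allLineBreaks.includes("\n")); // false
console.log(pemBody(pemLike, "PUBLIC")); // AAAABBBB
```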

Sign and Verify the JSON Web Tokens

Now that we can import the private and public keys as CryptoKey objects with the Web Crypto API, let’s create two utility functions:

- signJwt() – Signs the token with the appropriate private key.

- verifyJwt() – Verifies the token against the corresponding public key.
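To see what RS256 means at the Web Crypto level, here is a minimal sketch that signs and verifies a token by hand: the signature is RSASSA-PKCS1-v1_5 with SHA-256 over the Base64URL-encoded header and payload joined by a dot. This is an illustration, not djwt’s implementation. The function names are hypothetical, and unlike djwt’s verify(), this sketch only checks the signature and does not validate time-based claims such as `exp`.

```typescript
function base64UrlFromBytes(bytes: Uint8Array): string {
  let binary = "";
  bytes.forEach((b) => (binary += String.fromCharCode(b)));
  return btoa(binary).replace(/\+/g, "-").replace(/\//g, "_").replace(/=+$/, "");
}

function bytesFromBase64Url(segment: string) {
  const b64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  const padded = b64 + "=".repeat((4 - (b64.length % 4)) % 4);
  return Uint8Array.from(atob(padded), (c) => c.charCodeAt(0));
}

const encoder = new TextEncoder();

// RS256 = RSASSA-PKCS1-v1_5 with SHA-256 over "<header>.<payload>".
// The SHA-256 hash comes from the key, which must be created/imported with hash "SHA-256".
async function signRs256(payload: Record<string, unknown>, privateKey: CryptoKey): Promise<string> {
  const header = { alg: "RS256", typ: "JWT" };
  const signingInput =
    base64UrlFromBytes(encoder.encode(JSON.stringify(header))) + "." +
    base64UrlFromBytes(encoder.encode(JSON.stringify(payload)));
  const signature = await crypto.subtle.sign("RSASSA-PKCS1-v1_5", privateKey, encoder.encode(signingInput));
  return `${signingInput}.${base64UrlFromBytes(new Uint8Array(signature))}`;
}

// Returns the decoded payload if the signature checks out, otherwise null.
async function verifyRs256(token: string, publicKey: CryptoKey): Promise<Record<string, unknown> | null> {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [headerB64, payloadB64, signatureB64] = parts;
  const valid = await crypto.subtle.verify(
    "RSASSA-PKCS1-v1_5",
    publicKey,
    bytesFromBase64Url(signatureB64),
    encoder.encode(`${headerB64}.${payloadB64}`),
  );
  if (!valid) return null;
  return JSON.parse(new TextDecoder().decode(bytesFromBase64Url(payloadB64)));
}
```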

To begin, create a jwt.ts file in the utils folder and add the following module imports.

src/utils/jwt.ts

```typescript
import { getNumericDate, create, verify, dotenvConfig } from "../deps.ts";
import type { Payload, Header } from "../deps.ts";
import { convertToCryptoKey } from "./convertCryptoKey.ts";

// Load the keys from .env into Deno.env so signJwt() and verifyJwt() can read them
dotenvConfig({ export: true, path: ".env" });
```

Sign the JWT

To sign the access and refresh tokens with the private keys stored in the .env file, add the following code to the src/utils/jwt.ts file.

src/utils/jwt.ts

```typescript
export const signJwt = async ({
  user_id,
  token_uuid,
  issuer,
  base64PrivateKeyPem,
  expiresIn,
}: {
  user_id: string;
  token_uuid: string;
  issuer: string;
  base64PrivateKeyPem: "ACCESS_TOKEN_PRIVATE_KEY" | "REFRESH_TOKEN_PRIVATE_KEY";
  expiresIn: Date;
}) => {
  const header: Header = {
    alg: "RS256",
    typ: "JWT",
  };

  // iat and exp are NumericDate values (seconds since the Unix epoch)
  const nowInSeconds = Math.floor(Date.now() / 1000);
  const tokenExpiresIn = getNumericDate(expiresIn);

  const payload: Payload = {
    iss: issuer,
    iat: nowInSeconds,
    exp: tokenExpiresIn,
    sub: user_id,
    token_uuid,
  };

  // The .env file stores the PEM key base64-encoded, so decode it first
  const cryptoPrivateKey = await convertToCryptoKey({
    pemKey: atob(Deno.env.get(base64PrivateKeyPem) as string),
    type: "PRIVATE",
  });

  const token = await create(header, payload, cryptoPrivateKey!);

  return { token, token_uuid };
};
```
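The iat and exp claims are NumericDate values. A NumericDate is a count of whole seconds since the Unix epoch (RFC 7519), not the milliseconds that Date.now() returns.

Here is a small sketch of the payload signJwt() builds. It uses a hypothetical buildClaims() helper with an injectable clock, so the arithmetic can be checked. The sketch applies Math.floor to both claims, so exp minus iat always equals the token lifetime in seconds.

```typescript
// JWT "NumericDate": whole seconds since the Unix epoch (RFC 7519).
function toNumericDate(date: Date): number {
  return Math.floor(date.getTime() / 1000);
}

interface TokenClaims {
  iss: string;
  sub: string;
  iat: number;
  exp: number;
  token_uuid: string;
}

// Hypothetical helper mirroring the payload built in signJwt().
// `now` is injectable so the result is deterministic in tests.
function buildClaims(
  userId: string,
  tokenUuid: string,
  issuer: string,
  ttlMinutes: number,
  now: Date = new Date(),
): TokenClaims {
  const expiresAt = new Date(now.getTime() + ttlMinutes * 60 * 1000);
  return {
    iss: issuer,
    sub: userId,
    iat: toNumericDate(now),
    exp: toNumericDate(expiresAt),
    token_uuid: tokenUuid,
  };
}
```

For the 15-minute access token, exp ends up exactly 900 seconds after iat.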

Verify the JWT

To verify the access and refresh tokens with the public keys in the .env file, add the code snippet below to the src/utils/jwt.ts file.

src/utils/jwt.ts

```typescript
export const verifyJwt = async <T>({
  token,
  base64PublicKeyPem,
}: {
  token: string;
  base64PublicKeyPem: "ACCESS_TOKEN_PUBLIC_KEY" | "REFRESH_TOKEN_PUBLIC_KEY";
}): Promise<T | null> => {
  try {
    // Decode the base64-encoded PEM from .env and import it as a public key
    const cryptoPublicKey = await convertToCryptoKey({
      pemKey: atob(Deno.env.get(base64PublicKeyPem) as string),
      type: "PUBLIC",
    });

    return (await verify(token, cryptoPublicKey!)) as T;
  } catch (error) {
    // Any failure (bad signature, expired token, malformed input) yields null
    console.log(error);
    return null;
  }
};
```
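verifyJwt() catches every error and returns null. As a result, a bad signature and an expired token look the same to the caller.

Besides checking the signature, the verify() function from deps.ts is expected to reject tokens whose exp claim has passed. The hypothetical checkTimeClaims() below sketches what such a time-based check amounts to under RFC 7519: a token is valid only before exp, and not before nbf. The optional leeway for clock skew is an assumption; the article doesn't configure one.

```typescript
type TimeCheck = "ok" | "expired" | "not_yet_valid" | "missing_exp";

// Hypothetical check of the time-based JWT claims (all values in seconds).
function checkTimeClaims(
  claims: { exp?: number; nbf?: number },
  nowSeconds: number,
  leewaySeconds = 0,
): TimeCheck {
  if (typeof claims.exp !== "number") return "missing_exp";
  // RFC 7519: the current time MUST be before exp.
  if (nowSeconds - leewaySeconds >= claims.exp) return "expired";
  // RFC 7519: the current time MUST be at or after nbf, when present.
  if (typeof claims.nbf === "number" && nowSeconds + leewaySeconds < claims.nbf) {
    return "not_yet_valid";
  }
  return "ok";
}
```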

Create the Authentication Route Handlers

In this section, you'll create route handlers to implement the JSON Web Token authentication. To get started, create a controllers folder in the src directory. In the src/controllers folder, create an auth.controller.ts file and add the following module imports.

src/controllers/auth.controller.ts

```typescript
import { ObjectId, RouterContext } from "../deps.ts";
import { Bson } from "../deps.ts";
import { User } from "../models/user.model.ts";
import { signJwt, verifyJwt } from "../utils/jwt.ts";
import redisClient from "../utils/connectRedis.ts";

// Token lifetimes in minutes
const ACCESS_TOKEN_EXPIRES_IN = 15;
const REFRESH_TOKEN_EXPIRES_IN = 60;
```

Above, we created the ACCESS_TOKEN_EXPIRES_IN and REFRESH_TOKEN_EXPIRES_IN constants to store the lifetimes of the access and refresh tokens in minutes.

SignUp User Controller

Now let’s create a route handler to register new users. This middleware will be called when a POST request is made to the /api/auth/register endpoint.

To add a new user to the database, we'll extract the JSON object from the request body and pass it to the User.insertOne() method, which inserts the new document and returns its ObjectId.

src/controllers/auth.controller.ts

```typescript
const signUpUserController = async ({
  request,
  response,
}: RouterContext<string>) => {
  try {
    const {
      name,
      email,
      password,
    }: { name: string; email: string; password: string } = await request.body()
      .value;

    const createdAt = new Date();
    const updatedAt = createdAt;

    // insertOne() resolves to the ObjectId of the new document.
    // The password is stored as plain text in this tutorial; hash it in a real app.
    const userId: string | Bson.ObjectId = await User.insertOne({
      name,
      email: email.toLowerCase(),
      password,
      createdAt,
      updatedAt,
    });

    if (!userId) {
      response.status = 500;
      response.body = { status: "error", message: "Error creating user" };
      return;
    }

    const user = await User.findOne({ _id: userId });

    response.status = 201;
    response.body = {
      status: "success",
      user,
    };
  } catch (error) {
    // E11000 is MongoDB's duplicate key error (assumes a unique index on email)
    if ((error.message as string).includes("E11000")) {
      response.status = 409;
      response.body = {
        status: "fail",
        message: "A user with that email already exists",
      };
      return;
    }

    response.status = 500;
    response.body = { status: "error", message: error.message };
    return;
  }
};
```

Since the .insertOne() method only returns an ObjectId instead of the complete document, we had to call the User.findOne() method to retrieve the document belonging to the ObjectId.

Login User Controller

Next, let's create a route handler to sign users into the API. Oak will invoke it when a POST request is made to the /api/auth/login endpoint.

To log the user into the API, we’ll extract the email and password from the request body and query the database to check if a user with that email address exists.

If a user exists, we’ll leverage the signJwt() utility function to generate the access and refresh tokens using the private keys stored in the .env file. Then, we’ll store the metadata of both the access and refresh tokens in the Redis database.
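This is what makes the tokens revocable: each JWT carries a random token_uuid, and the token is honored only while Redis still holds that key.

To illustrate the idea without a Redis server, here is a hypothetical in-memory TtlStore. It mimics only the behavior the controllers rely on:

- set() stores a value with an ex TTL in seconds.
- get() returns null once a key has expired or been deleted.
- del() removes several keys at once.

It is a sketch for understanding, not a replacement for Redis. It has no persistence and isn't shared across server instances.

```typescript
// Hypothetical in-memory stand-in for the Redis commands the controllers use.
class TtlStore {
  private entries = new Map<string, { value: string; expiresAt: number }>();
  private now: () => number;

  // `now` returns the current time in milliseconds; injectable for testing.
  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  // Like Redis SET key value EX seconds
  set(key: string, value: string, options: { ex: number }): void {
    this.entries.set(key, { value, expiresAt: this.now() + options.ex * 1000 });
  }

  // Like Redis GET: null when the key is missing or its TTL has elapsed
  get(key: string): string | null {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // evict lazily on read
      return null;
    }
    return entry.value;
  }

  // Like Redis DEL: removes the given keys and returns how many were still live
  del(...keys: string[]): number {
    let removed = 0;
    for (const key of keys) {
      if (this.get(key) !== null) {
        this.entries.delete(key);
        removed++;
      }
    }
    return removed;
  }
}
```

Deleting a token's UUID, as the logout handler does, makes later lookups return null. That holds even though the JWT's signature and exp claim are still valid.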

src/controllers/auth.controller.ts

```typescript
const loginUserController = async ({
  request,
  response,
  cookies,
}: RouterContext<string>) => {
  try {
    const { email, password }: { email: string; password: string } =
      await request.body().value;

    const message = "Invalid email or password";
    const userExists = await User.findOne({ email: email.toLowerCase() });

    // Reject unknown emails and wrong passwords with the same message.
    // Plain-text comparison to match how sign-up stores the password here.
    if (!userExists || userExists.password !== password) {
      response.status = 401;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    // Absolute expiry dates (the constants are in minutes)
    const accessTokenExpiresIn = new Date(
      Date.now() + ACCESS_TOKEN_EXPIRES_IN * 60 * 1000
    );
    const refreshTokenExpiresIn = new Date(
      Date.now() + REFRESH_TOKEN_EXPIRES_IN * 60 * 1000
    );

    const { token: access_token, token_uuid: access_uuid } = await signJwt({
      user_id: String(userExists._id),
      token_uuid: crypto.randomUUID(),
      base64PrivateKeyPem: "ACCESS_TOKEN_PRIVATE_KEY",
      expiresIn: accessTokenExpiresIn,
      issuer: "website.com",
    });

    const { token: refresh_token, token_uuid: refresh_uuid } = await signJwt({
      user_id: String(userExists._id),
      token_uuid: crypto.randomUUID(),
      base64PrivateKeyPem: "REFRESH_TOKEN_PRIVATE_KEY",
      expiresIn: refreshTokenExpiresIn,
      issuer: "website.com",
    });

    // Map each token's UUID to the user ID; the Redis TTL (ex, in seconds)
    // matches the token's lifetime
    await Promise.all([
      redisClient.set(access_uuid, String(userExists._id), {
        ex: ACCESS_TOKEN_EXPIRES_IN * 60,
      }),
      redisClient.set(refresh_uuid, String(userExists._id), {
        ex: REFRESH_TOKEN_EXPIRES_IN * 60,
      }),
    ]);

    cookies.set("access_token", access_token, {
      expires: accessTokenExpiresIn,
      maxAge: ACCESS_TOKEN_EXPIRES_IN * 60,
      httpOnly: true,
      secure: false,
    });

    cookies.set("refresh_token", refresh_token, {
      expires: refreshTokenExpiresIn,
      maxAge: REFRESH_TOKEN_EXPIRES_IN * 60,
      httpOnly: true,
      secure: false,
    });

    response.status = 200;
    response.body = { status: "success", access_token };
  } catch (error) {
    response.status = 500;
    response.body = { status: "error", message: error.message };
    return;
  }
};
```

After that, the access and refresh tokens are added to the response cookies and sent to the client as HttpOnly cookies.

Finally, only the access token will be sent in the JSON response so that the user can include it in the Authorization header for subsequent requests that require authentication.
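JavaScript running in the page can't read HttpOnly cookies through document.cookie, which limits what an XSS bug can steal.

To show which attributes end up on the wire, here is a hypothetical serializeCookie() helper. It is not Oak's implementation, just a sketch of the Set-Cookie format from RFC 6265. The article sets secure: false so the cookies also work over plain http://localhost. Over HTTPS you would normally set it to true.

```typescript
interface CookieOptions {
  maxAge?: number; // seconds
  expires?: Date;
  httpOnly?: boolean;
  secure?: boolean;
  path?: string;
  sameSite?: "Strict" | "Lax" | "None";
}

// Hypothetical serializer producing a Set-Cookie header value (RFC 6265 syntax).
function serializeCookie(name: string, value: string, opts: CookieOptions = {}): string {
  const parts = [`${name}=${encodeURIComponent(value)}`];
  if (opts.path) parts.push(`Path=${opts.path}`);
  if (opts.expires) parts.push(`Expires=${opts.expires.toUTCString()}`);
  if (opts.maxAge !== undefined) parts.push(`Max-Age=${Math.floor(opts.maxAge)}`);
  if (opts.sameSite) parts.push(`SameSite=${opts.sameSite}`);
  if (opts.secure) parts.push("Secure");
  if (opts.httpOnly) parts.push("HttpOnly"); // not readable from document.cookie
  return parts.join("; ");
}
```

A cookie sent with a Max-Age of zero or less, or with an Expires date in the past, is deleted by the browser immediately. That is the mechanism behind the expired cookies the logout handler sends.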

Refresh Access Token Controller

Now that we’re able to sign a user into the API, let’s create a route handler to refresh their access token when it expires. This route controller will be called when a GET request is made to the /api/auth/refresh endpoint.

To refresh the access token, we'll extract the refresh token from the request cookies and call the verifyJwt() function to confirm that it was signed with the matching private key and has not been tampered with or expired.

If the refresh token is valid, we'll query Redis to check whether the metadata belonging to the token still exists. If the session is still active, we'll call the User.findOne() method to check whether the user belonging to the token still exists.

src/controllers/auth.controller.ts

```typescript
const refreshAccessTokenController = async ({
  response,
  cookies,
}: RouterContext<string>) => {
  try {
    const refresh_token = await cookies.get("refresh_token");
    const message = "Could not refresh access token";

    if (!refresh_token) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    const decoded = await verifyJwt<{ sub: string; token_uuid: string }>({
      token: refresh_token,
      base64PublicKeyPem: "REFRESH_TOKEN_PUBLIC_KEY",
    });

    if (!decoded) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    // The session is only valid while Redis still holds the token's UUID
    const user_id = await redisClient.get(decoded.token_uuid);

    if (!user_id) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    const user = await User.findOne({ _id: new ObjectId(user_id) });

    if (!user) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    const accessTokenExpiresIn = new Date(
      Date.now() + ACCESS_TOKEN_EXPIRES_IN * 60 * 1000
    );

    const { token: access_token, token_uuid: access_uuid } = await signJwt({
      user_id: decoded.sub,
      issuer: "website.com",
      token_uuid: crypto.randomUUID(),
      base64PrivateKeyPem: "ACCESS_TOKEN_PRIVATE_KEY",
      expiresIn: accessTokenExpiresIn,
    });

    await redisClient.set(access_uuid, String(user._id), {
      ex: ACCESS_TOKEN_EXPIRES_IN * 60,
    });

    cookies.set("access_token", access_token, {
      expires: accessTokenExpiresIn,
      maxAge: ACCESS_TOKEN_EXPIRES_IN * 60,
      httpOnly: true,
      secure: false,
    });

    response.status = 200;
    response.body = { status: "success", access_token };
  } catch (error) {
    response.status = 500;
    response.body = { status: "error", message: error.message };
    return;
  }
};
```

Once all the checks pass, the signJwt() function is called to generate a new access token. The redisClient.set() method then stores the new token's metadata in Redis.

Finally, the new access token is sent to the client as an HttpOnly cookie and is also included in the JSON response.
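On the client side, the two status codes in this flow work together. The requireUser guard answers 401 when the access token is missing or invalid, and the refresh endpoint answers 403 when the refresh fails. A common pattern is to retry a request once after a successful refresh.

The fetchWithRefresh() helper below is a hypothetical browser-side sketch of that pattern. It assumes the frontend runs on an origin allowed by the server's CORS settings, so that credentials: "include" sends the HttpOnly cookies. Server-side fetch in Deno or Node doesn't keep a cookie jar, so there you would have to forward the cookies yourself.

```typescript
type FetchLike = (url: string, init?: RequestInit) => Promise<Response>;

// Hypothetical helper: call a protected endpoint and, if the access token is
// rejected with 401, call /api/auth/refresh once and retry the request.
async function fetchWithRefresh(
  url: string,
  apiBase: string,
  fetchImpl: FetchLike = (u, i) => fetch(u, i),
): Promise<Response> {
  // In a browser, credentials: "include" sends the HttpOnly cookies cross-origin.
  const init: RequestInit = { credentials: "include" };
  const first = await fetchImpl(url, init);
  if (first.status !== 401) return first;

  const refresh = await fetchImpl(`${apiBase}/api/auth/refresh`, init);
  // Refresh token missing, expired, or revoked: the user has to log in again.
  if (!refresh.ok) return first;

  // Retry once; the browser now holds the new access_token cookie.
  return fetchImpl(url, init);
}
```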

Logout User Controller

Next, let's create a route handler to log the user out of the API. Oak will call it when the server receives a GET request on the /api/auth/logout endpoint.

To sign the user out, we'll extract the refresh token from the request cookies and call the verifyJwt() function to check whether it is valid.

If the token has not expired and has not been tampered with, we'll call the redisClient.del() method to delete the metadata belonging to both the access and refresh tokens. The access token's UUID comes from state.access_uuid, which the requireUser middleware sets.

src/controllers/auth.controller.ts

```typescript
const logoutController = async ({
  state,
  response,
  cookies,
}: RouterContext<string>) => {
  try {
    const refresh_token = await cookies.get("refresh_token");
    const message = "Token is invalid or session has expired";

    if (!refresh_token) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    const decoded = await verifyJwt<{ sub: string; token_uuid: string }>({
      token: refresh_token,
      base64PublicKeyPem: "REFRESH_TOKEN_PUBLIC_KEY",
    });

    if (!decoded) {
      response.status = 403;
      response.body = {
        status: "fail",
        message,
      };
      return;
    }

    // Revoke both tokens: the refresh token's UUID comes from the cookie,
    // the access token's UUID was put in state by requireUser
    await redisClient.del(decoded.token_uuid, state.access_uuid);

    // Overwrite both cookies with empty, already-expired values
    cookies.set("access_token", "", {
      httpOnly: true,
      secure: false,
      maxAge: -1,
    });

    cookies.set("refresh_token", "", {
      httpOnly: true,
      secure: false,
      maxAge: -1,
    });

    response.status = 200;
    response.body = { status: "success" };
  } catch (error) {
    response.status = 500;
    response.body = { status: "error", message: error.message };
  }
};

export default {
  signUpUserController,
  loginUserController,
  logoutController,
  refreshAccessTokenController,
};
```

After that, we’ll send expired cookies with the same key names as the access and refresh tokens in order to delete the existing ones in the browser or API client.

Get Me Controller

Let's create a route handler that Oak will invoke to return the authenticated user's profile information to the client or frontend app. This route handler will be protected by a middleware guard, so only users with valid access tokens can access it.

src/controllers/user.controller.ts

```typescript
import type { RouterContext } from "../deps.ts";
import { User } from "../models/user.model.ts";

const getMeController = async ({ state, response }: RouterContext<string>) => {
  try {
    // user_id was placed in state by the requireUser middleware
    const user = await User.findOne({ _id: state.user_id });

    if (!user) {
      response.status = 401;
      response.body = {
        status: "fail",
        message: "The user belonging to this token no longer exists",
      };
      return;
    }

    response.status = 200;
    response.body = {
      status: "success",
      user,
    };
  } catch (error) {
    response.status = 500;
    response.body = {
      status: "error",
      message: error.message,
    };
    return;
  }
};

export default { getMeController };
```

Above, we extracted the user_id from the Oak state object, queried the database to retrieve the user that matches the query, and returned the found document to the client.

Create an Auth Middleware Guard

Here, we’ll create an authentication middleware guard that will ensure that a valid access token is provided before the next middleware in the middleware stack is called to process the request.

Create a middleware/requireUser.ts file in the src directory and add the code snippets below. To make the authentication flow flexible, we’ll configure the middleware guard to extract the access token from either the Authorization header or Request Cookies object.

src/middleware/requireUser.ts

```typescript
import { Context, ObjectId } from "../deps.ts";
import { User } from "../models/user.model.ts";
import redisClient from "../utils/connectRedis.ts";
import { verifyJwt } from "../utils/jwt.ts";

const requireUser = async (ctx: Context, next: () => Promise<unknown>) => {
  try {
    const headers: Headers = ctx.request.headers;
    const authorization = headers.get("Authorization");
    const cookieToken = await ctx.cookies.get("access_token");

    // Prefer "Authorization: Bearer <token>", fall back to the access_token cookie
    let access_token;
    if (authorization) {
      access_token = authorization.split(" ")[1];
    } else if (cookieToken) {
      access_token = cookieToken;
    }

    if (!access_token) {
      ctx.response.status = 401;
      ctx.response.body = {
        status: "fail",
        message: "You are not logged in",
      };
      return;
    }

    const decoded = await verifyJwt<{ sub: string; token_uuid: string }>({
      token: access_token,
      base64PublicKeyPem: "ACCESS_TOKEN_PUBLIC_KEY",
    });

    const message = "Token is invalid or session has expired";

    if (!decoded) {
      ctx.response.status = 401;
      ctx.response.body = {
        status: "fail",
        message,
      };
      return;
    }

    // A revoked or expired session has no entry in Redis
    const user_id = await redisClient.get(decoded.token_uuid);

    if (!user_id) {
      ctx.response.status = 401;
      ctx.response.body = {
        status: "fail",
        message,
      };
      return;
    }

    const userExists = await User.findOne({ _id: new ObjectId(user_id) });

    if (!userExists) {
      ctx.response.status = 401;
      ctx.response.body = {
        status: "fail",
        message: "The user belonging to this token no longer exists",
      };
      return;
    }

    // Expose the user and token IDs to downstream handlers, then clean up
    ctx.state["user_id"] = userExists._id;
    ctx.state["access_uuid"] = decoded.token_uuid;
    await next();
    delete ctx.state.user_id;
    delete ctx.state.access_uuid;
  } catch (error) {
    ctx.response.status = 500;
    ctx.response.body = {
      status: "error",
      message: error.message,
    };
  }
};

export default requireUser;
```

- We extracted the access token from either the Authorization header or the request cookies and assigned it to the access_token variable.
- Then, we called the verifyJwt() function to verify the access token against the public key stored in the .env file.
- Next, we queried Redis to check whether the metadata belonging to the access token still exists. If it does, Redis returns the user's ID.
- We then passed that user_id to the User.findOne() method to check whether the user belonging to the access token still exists in the MongoDB database.
- If all the checks pass, the user_id and access_uuid are added to the Oak state object before the next middleware is called to handle the request.
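The token lookup in requireUser splits the Authorization header on a space and takes the second part, whatever the scheme. Here is a slightly stricter hypothetical helper, extractAccessToken(), as a sketch:

- It accepts only the Bearer scheme, matched case-insensitively because HTTP authentication schemes are case-insensitive.
- If the header is missing or uses another scheme, it falls back to the access_token cookie.

That fallback differs from the article's code, which ignores the cookie whenever an Authorization header is present.

```typescript
// Hypothetical, stricter version of the token lookup in requireUser.
function extractAccessToken(
  authorization: string | null,
  cookieToken: string | undefined,
): string | undefined {
  if (authorization) {
    // "Bearer <token>", scheme matched case-insensitively
    const match = /^Bearer\s+(\S+)$/i.exec(authorization.trim());
    if (match) return match[1];
  }
  // Missing or non-Bearer header: fall back to the cookie (empty string -> undefined)
  return cookieToken || undefined;
}
```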

Create the API Routes

Now that we've created all the route handlers and the authentication guard, let's create the API routes that invoke them. In the src directory, create a routes/auth.routes.ts file and add the code below.

src/routes/auth.routes.ts

```typescript
import { Router } from "../deps.ts";
import authController from "../controllers/auth.controller.ts";
import requireUser from "../middleware/requireUser.ts";

const router = new Router();

router.post<string>("/register", authController.signUpUserController);
router.post<string>("/login", authController.loginUserController);
router.get<string>("/logout", requireUser, authController.logoutController);
router.get<string>("/refresh", authController.refreshAccessTokenController);

export default router;
```

Above, we created an Oak router by instantiating the Router class and assigned the instance to the router constant. Then, we defined the authentication routes for the respective route handlers.

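The requireUser() authentication guard we added to the /logout route ensures that only authenticated users can access it. It also sets state.access_uuid, which the logout handler needs in order to delete the access token's metadata from Redis.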

With that out of the way, let's create a router that returns the authenticated user's profile information to the client. To do this, create a user.routes.ts file in the src/routes folder and add the code below.

src/routes/user.routes.ts

```typescript
import { Router } from "../deps.ts";
import userController from "../controllers/user.controller.ts";
import requireUser from "../middleware/requireUser.ts";

const router = new Router();

router.get<string>("/me", requireUser, userController.getMeController);

export default router;
```

We added a /me route to the user router and protected it with the requireUser authentication guard.

Finally, let's create an init() function to register the routes. To do that, create an index.ts file in the src/routes folder and add the following code.

src/routes/index.ts

```typescript
import { Application } from "../deps.ts";
import authRouter from "./auth.routes.ts";
import userRouter from "./user.routes.ts";

function init(app: Application) {
  app.use(authRouter.prefix("/api/auth/").routes());
  app.use(userRouter.prefix("/api/users/").routes());
}

export default {
  init,
};
```

Register the API Router

Now let's import the init() function defined in the src/routes/index.ts file and call it to register all the routes. To do that, replace the content of the src/server.ts file with the following code.

src/server.ts

```typescript
import { Application, Router, logger, oakCors } from "./deps.ts";
import type { RouterContext } from "./deps.ts";
import appRouter from "./routes/index.ts";

const app = new Application();
const router = new Router();

// Middleware Logger
app.use(logger.default.logger);
app.use(logger.default.responseTime);

// Health checker
router.get<string>("/api/healthchecker", (ctx: RouterContext<string>) => {
  ctx.response.status = 200;
  ctx.response.body = {
    status: "success",
    message: "JWT Refresh Access Tokens in Deno with RS256 Algorithm",
  };
});

app.use(
  oakCors({
    origin: /^.+localhost:(3000|3001)$/,
    optionsSuccessStatus: 200,
    credentials: true,
  })
);

appRouter.init(app);
app.use(router.routes());
app.use(router.allowedMethods());

app.addEventListener("listen", ({ port, secure }) => {
  console.info(
    `🚀 Server started on ${secure ? "https://" : "http://"}localhost:${port}`
  );
});

const port = 8000;
app.listen({ port });
```

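In the code above, we registered the request logger, added an /api/healthchecker route, and configured CORS. With this setup, the API accepts credentialed cross-origin requests (cookies included) from frontend apps running on localhost ports 3000 and 3001.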
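oakCors matches the origin regular expression against the request's Origin header. Here is a quick sketch for checking which origins the pattern admits. Because .+ accepts any prefix, the article's pattern allows any scheme and any host ending in localhost on ports 3000 and 3001, for example a subdomain such as app.localhost. The stricter strictPattern is an assumption shown for comparison, not part of the article's configuration.

```typescript
// The origin pattern passed to oakCors in src/server.ts.
const articlePattern = /^.+localhost:(3000|3001)$/;

// Hypothetical stricter alternative: http(s), exactly "localhost", ports 3000/3001.
const strictPattern = /^https?:\/\/localhost:(3000|3001)$/;

function isAllowedOrigin(pattern: RegExp, origin: string): boolean {
  return pattern.test(origin);
}
```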

Finally, make sure the Redis and MongoDB Docker containers are running, and run the command below to start the Deno HTTP server.

```bash
denon run --allow-net --allow-read --allow-write --allow-env src/server.ts
```

Test the JWT Authentication API

We are now ready to test the Deno API endpoints with a front-end application or API testing software. In my case, I’ll be using Postman. To have access to the same collection used in testing the API, download the project source code from GitHub and import the Deno_MongoDB.postman_collection.json file into your Postman application.

Alternatively, you can install the Thunder Client extension in VS Code and import the Deno_MongoDB.postman_collection.json file to access the collection.

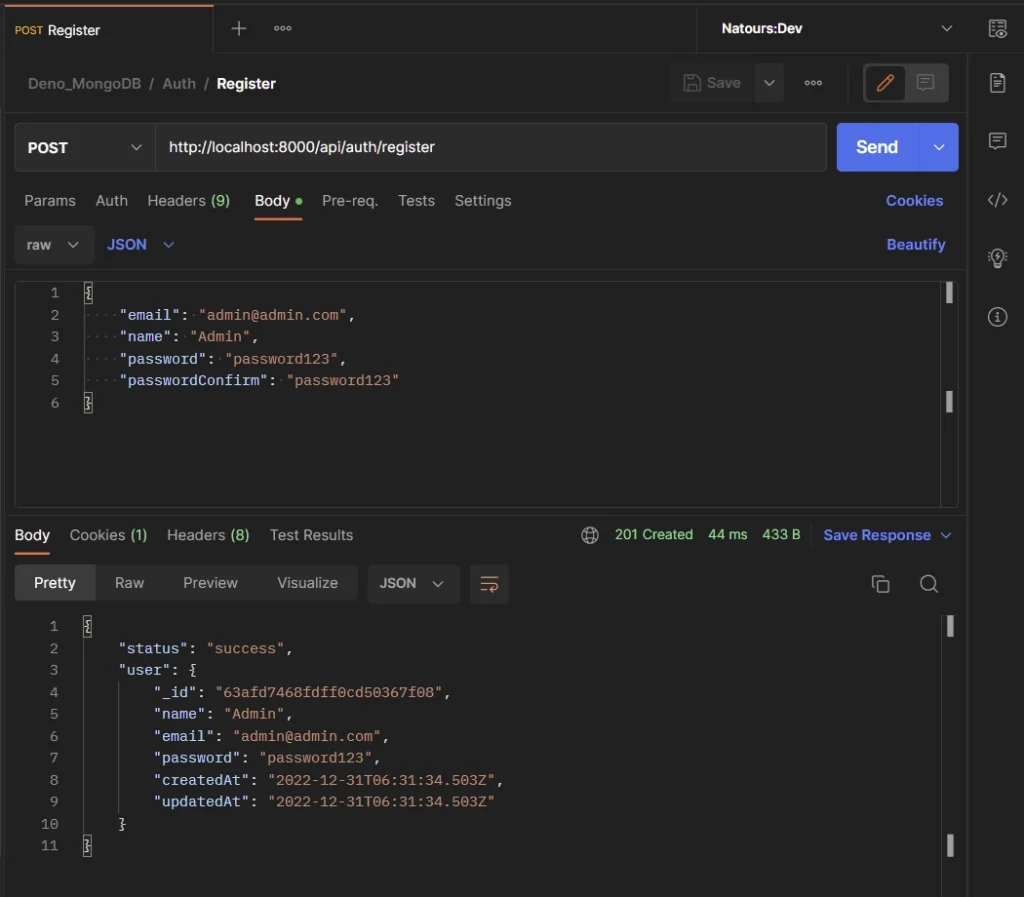

SignUp User

To register for an account, add the JSON object below to the request body in Postman or Thunder Client and make a POST request to the http://localhost:8000/api/auth/register endpoint.

```json
{
  "email": "admin@admin.com",
  "name": "Admin",
  "password": "password123",
  "passwordConfirm": "password123"
}
```

The Deno API will receive the request, extract the JSON object from the request body and insert the new user document into the MongoDB database. After that, it will return the newly-created document to the client in the JSON response.

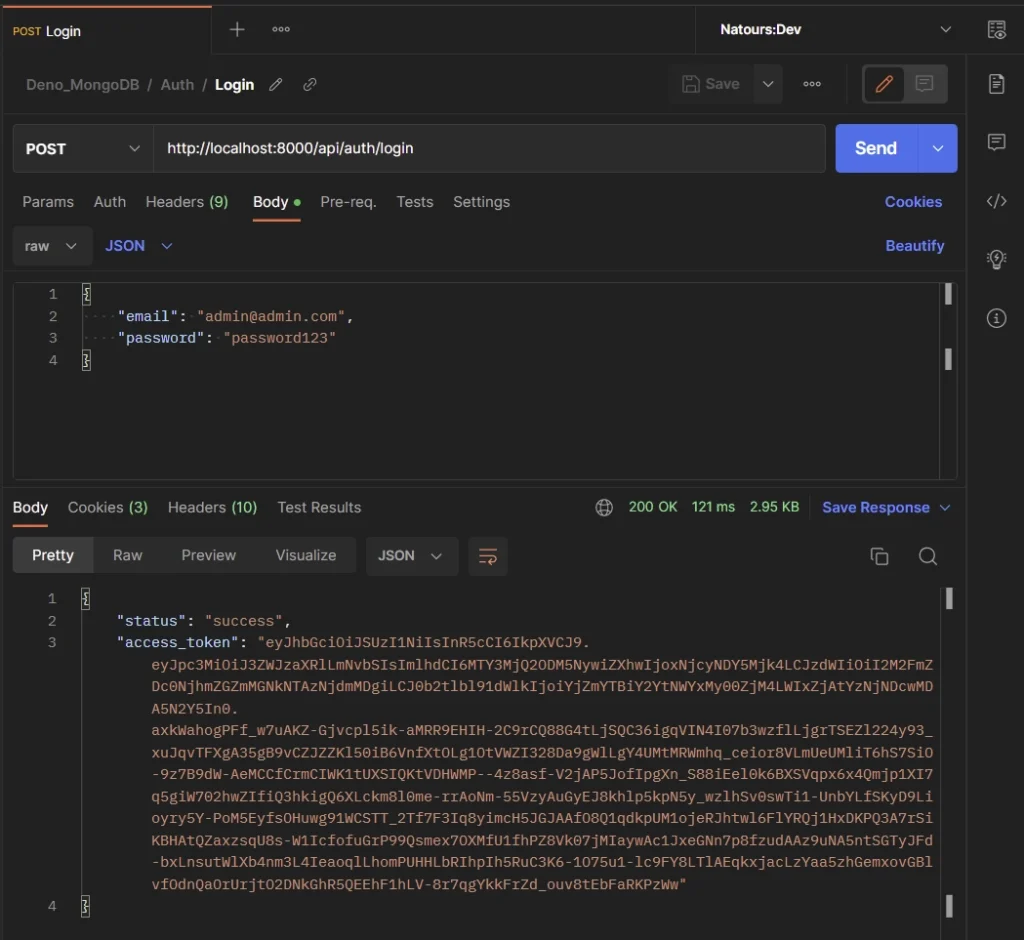

SignIn User

To sign into the API, add your email and password to the JSON object, and make a POST request to the http://localhost:8000/api/auth/login endpoint.

```json
{
  "email": "admin@admin.com",
  "password": "password123"
}
```

Upon successful authentication, the server will generate access and refresh tokens with the private keys stored in the .env file and return them to the client as HttpOnly cookies.

To make the authentication flow flexible, the server will also include the access token in the JSON response so that the user can add it to the Authorization header upon subsequent requests that require authentication.

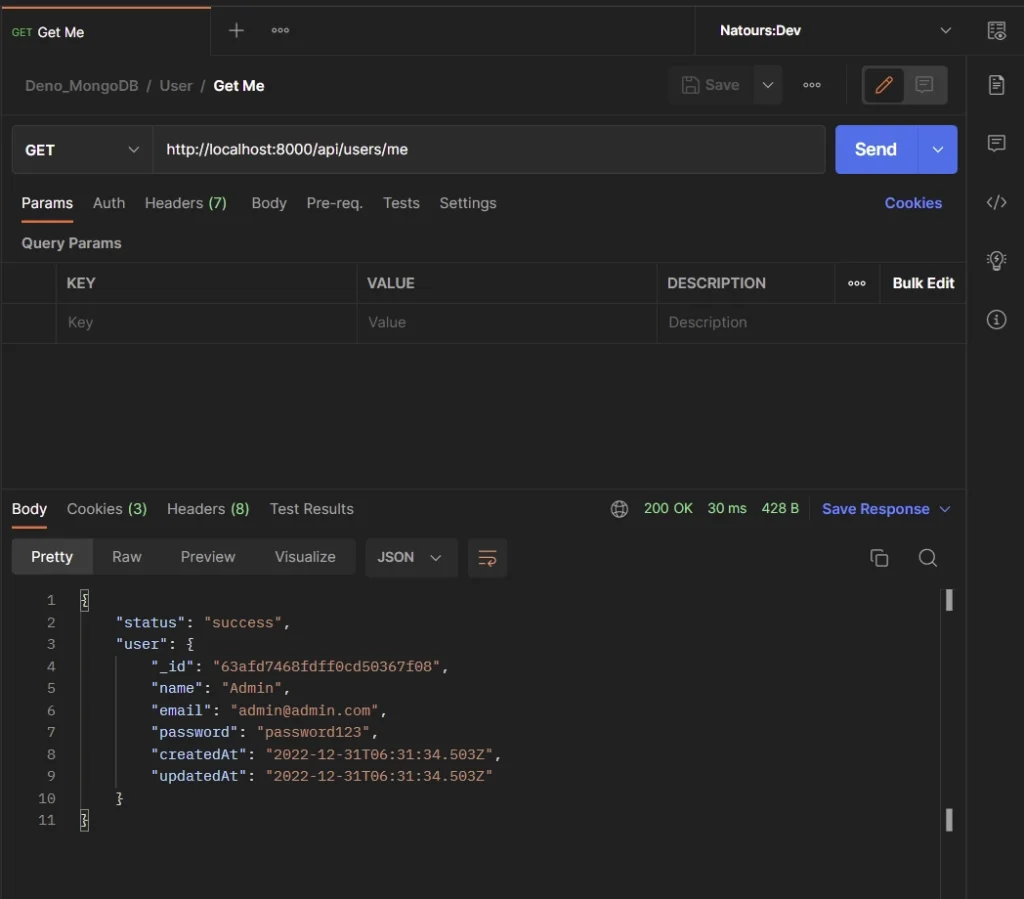

Get Profile Information

You must have a valid access token before you can retrieve your profile information from the Deno API, because the /api/users/me route is protected and only authenticated users can access it.

When you log into the API, the server returns an access token that can be used to access protected routes. To retrieve your profile information, make a GET request to the http://localhost:8000/api/users/me endpoint. Postman will automatically include the cookies with the request.

Alternatively, you can manually add the access token to the Authorization header as a Bearer token (Authorization: Bearer your_access_token) before making the request.

The server will then extract the access token from either the Authorization header or the Cookies object and validate it against the public key stored in the .env file.

If the token is valid, the server also checks that the token's metadata still exists in Redis and that the user still exists in MongoDB. Only then does it return the profile information in the JSON response.

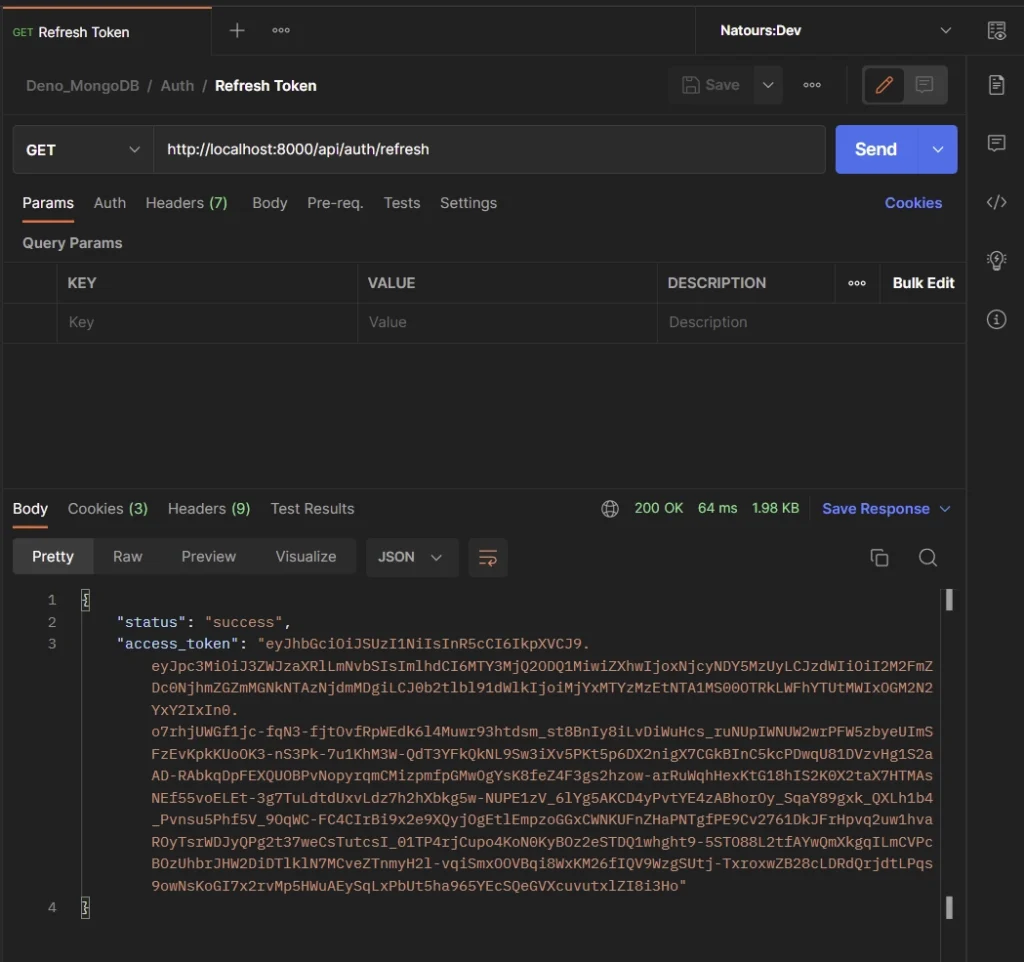

Refresh Access Token

To obtain a new access token from the API, make sure you have a valid refresh token cookie and make a GET request to the http://localhost:8000/api/auth/refresh endpoint. The server expects the refresh token to be included in the request Cookies object instead of the Authorization header.

When the server receives the request, it’ll extract the refresh token from the request Cookies object and validate it against the public key stored in the .env file.

If the refresh token is valid and its session still exists in Redis, the server will generate a new access token and return it to the client as an HttpOnly cookie. The server also adds the access token to the JSON response for those who prefer the Authorization header option.

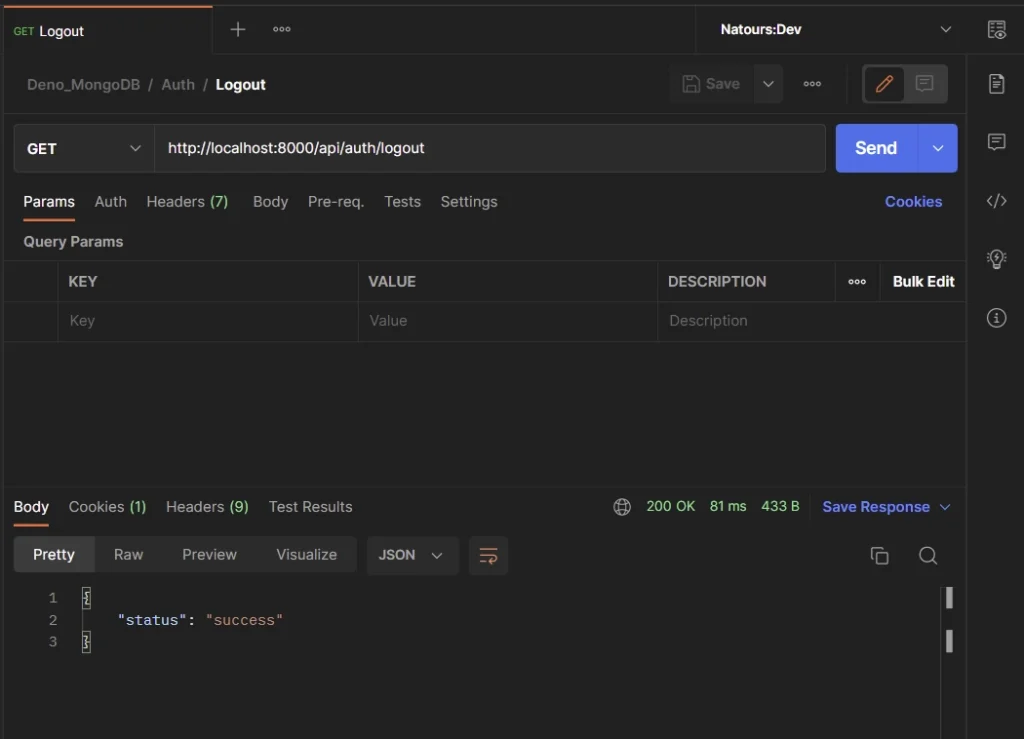

Logout User

To log out of the API, make sure you have a valid access token and make a GET request to the http://localhost:8000/api/auth/logout endpoint.

The server will then delete the user’s session from the Redis database and send expired cookies to delete the existing ones in the browser or the API testing software.

Conclusion

In this article, you learned how to refresh access tokens in Deno with RS256-signed JSON Web Tokens, using Redis to store the token metadata so the tokens can be revoked. In addition, you learned how to generate private and public keys with the Web Crypto API.

You can find the complete source code of the Deno project on GitHub.